Why Different Social Media Companies Promote Different People

Have you noticed that different social media services attract different kinds of people?

Rich VCs talk about life and wealth on Twitter.

Fashionistas share their work on Instagram.

Your uncles and grandmas are on Facebook.

And your favourite video game streamer is on YouTube.

Why is that?

Each of these services has millions of users, so each should be big enough to house every community you can think of. That means it can't just be down to culture.

The answer has to do with the medium itself. Clothes are inherently visual. You can write an essay about an outfit, but a picture of it gives you all the information you need.

Instagram is one of the best places to share photos online, which makes it one of the top places to share your fashion creations.

Professionals on Twitter

Why are there lots of writers on Twitter?

Because Twitter is designed mostly for writing. Even when you share memes, you still need to write something. The 280-character limit makes writers compress their thoughts into fewer words.

If you want to explain more, you can create a thread about the idea, which acts as a mini blog post.

Twitter is a great place to share things you read with lots of people, and you can give your two cents on the topic while sharing it.

People tend to share their longer work on Twitter, like blog posts on their website or their newsletter, and generate excitement for it there. This may help explain why Twitter bought Revue, a Substack competitor, so it could integrate newsletters into the app: for many newsletter writers, Twitter is their main acquisition tool.

It may also explain why the network has so many professionals, a lot of them Americans living on the coasts.

Many people mention that Twitter is their best networking tool, as they share ideas that people in their industry find valuable, and people use direct messages to start personal connections.

Twitter is hugely popular with white-collar professionals, and mainly city dwellers use the service.

This is why there is an oversampling of professions such as journalists, VCs, programmers, marketers, writers, tech founders, etc.

You won't find many blacksmiths, plumbers, or linesmen.

The journalism part has to do with Twitter's design. Twitter is known as the place to get breaking news, so it's where journalists go when looking for material to write about.

Contrast that with Facebook.

Friends and family with Facebook

Facebook is simple. Facebook is made for friends and family.

So it makes sense that your extended family is on there. Your friends may not be, depending on your age: they probably left a while ago and now use other services like Snapchat and Instagram.

Facebook has bought and built more apps for you to talk to friends and family, like WhatsApp and Facebook Messenger. Nobody writes long-form essays on these services, as they are designed for communication between people, not for explaining one's thoughts about the world.

You could argue WhatsApp is a bit different, because large groups can work as channels, like a company communicating with its users or a news outlet sharing what's happening in its local community.

This may explain why Facebook is popular in the American Midwest and other less urban areas, where people tend to focus more on other areas of life than on their career (hence the friends and family focus), compared to the coastal parts of America. These patterns show up in many other countries too.

Facebook has a wide appeal, mainly with older folks.

YouTube, The Entertainment Medium

YouTube is the hub for entertainment, so it is a medium used not for communication but to share ideas and make people laugh. There is a lack of direct messaging on YouTube, and communication is designed to be one-to-many. Think of YouTube comments.

Like Instagram, YouTube tends to be visual. Because of the longer time limit, people can experiment more, as with the video essay format: a traditional essay with highly engaging visuals to keep you hooked. But highbrow stuff like that is not as popular.

Let's plays, crazy challenges, celebrity vlogs and so on tend to be highly visual and engaging, as lots of stuff happens in those videos. They are great to watch when you are bored on a train ride.

Because of the amount of time allotted, many creators have room in the video to show their sponsorships, which are basically ads. They also promote their merchandise, which you can buy.

Due to the size of YouTube, almost everyone uses it at some point, from a person who wants to learn about fashion to a person who wants to learn about the periodic table. It's all on YouTube.

The video just has to be entertaining enough that you don't click away.

Even educational videos tend to be highly engaging. The ones that are not, like lectures, get few views or are split up into smaller videos.

But there is a growing genre of video that is not visual per se: podcasts.

Here you simply watch the host and guest talking on video. I watch a lot of these. I guess it's more visual than an audio-only podcast, as you get to see the faces of the guest and host and all their expressions.

A podcast can help a YouTube creator produce many more videos. A one-hour YouTube podcast can be cut into five different clips, all linking back to the original episode. The cost of production is also low compared to other genres, like travel videos or shopping hauls.

It can also be less of a time sink for the creator compared to something like vlogging.

Design affects the medium

The design of a social network affects who uses it, as it incentivises users to use the app in a certain way.

Instagram is for getting likes and followers. So eye-catching content is pushed.

Twitter is for getting retweets. So funny or outrageous content is pushed.

YouTube is for getting views. So outlandish content is pushed.

Because of that:

People with very visual hobbies will get more traction on Instagram.

People who have controversial opinions do well on Twitter.

People who are entertaining do well on YouTube.

This self-selects for people with the personalities and interests that suit each platform.

All of that explains why people thrive on different platforms, and why you find your favourite creators on different mediums.

If you liked this article, sign up to my mailing list, where I write more stuff like this.

How to extract currency related info from text

I was scrolling through Reddit when a user asked how to extract currency-related text from news headlines.

This is the question:

Hi, I'm new to this group. I'm trying to extract currency related entities in news headlines. I also want to extend it to a web app to highlight the captured entities. For example the sentence "Company XYZ gained $100 million in revenue in Q2". I want to highlight [$100 million] in the headline. Which library can be used to achieve such outcomes? Also note since this is news headlines $ maybe replaced with USD, in that case I would like to highlight [USD 100 million].

While I hadn't done this before, I have experience scraping text from websites, and the problem looked simple enough that it would likely only require basic NLP.

So I did a few Google searches and found several popular libraries that do just that.

Using spaCy to extract monetary information from text

In this blog post, I'm going to show you how to extract currency information from text.

I'm going to use this headline I found on Google:

23andMe Goes Public as $3.5 Billion Company With Branson Aid

Now, with a few lines of code using the NLP library spaCy, we can extract the currency-related text.

The code was adapted from this Stack Overflow answer:

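```python
import spacy

# Needs the small English model: python -m spacy download en_core_web_sm
nlp = spacy.load("en_core_web_sm")
doc = nlp('23andMe Goes Public as $3.5 Billion Company With Branson Aid')

# Keep only the named entities spaCy labels as MONEY
extracted_text = [ent.text for ent in doc.ents if ent.label_ == 'MONEY']
print(extracted_text)
# ['$3.5 Billion']
```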

With only a few lines of code, we were able to extract the financial information.

You will need some extra code when dealing with multiple headlines, like storing them in a list and using a for loop to do the extraction.

spaCy is a great library for getting things done with NLP. I don't consider myself an expert in NLP, but you should check it out.

The code is taking advantage of spaCy’s named entities.

From the docs:

A named entity is a “real-world object” that’s assigned a name – for example, a person, a country, a product or a book title. spaCy can recognize various types of named entities in a document, by asking the model for a prediction.

The named entities have annotations (labels), which we access in the code through ent.label_. By filtering the entities down to the MONEY type only, we make sure we are extracting the financial information in the headline.

How to replace a currency symbol with a currency abbreviation

As we can see, spaCy did a great job extracting the information we wanted, so the main task is done.

In the question, the person also needed help replacing the dollar sign with USD and highlighting the financial information.

Replacing the dollar sign is easy, as it can be done with native Python string methods.

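```python
# Swap the dollar sign for the USD abbreviation (note the trailing space)
print(extracted_text[0].replace('$', 'USD '))
# USD 3.5 Billion
```

Now we have replaced the symbol with the dollar abbreviation. The same can be done for any other currencies you want.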
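To handle several currencies at once, one option is a lookup table plus a regular expression. This is a minimal sketch: SYMBOL_TO_CODE and symbol_to_code are hypothetical names, and because "$" is shared by several currencies (USD, CAD, AUD and others), mapping it to USD is an assumption that only holds for US-focused headlines.

```python
import re

# Hypothetical mapping; "$" is ambiguous across currencies, so USD is an assumption.
SYMBOL_TO_CODE = {"$": "USD", "€": "EUR", "£": "GBP", "¥": "JPY"}

# One alternation of all the (escaped) symbols, plus any whitespace right after the symbol.
_SYMBOL_PATTERN = re.compile(
    "(" + "|".join(re.escape(symbol) for symbol in SYMBOL_TO_CODE) + r")\s*"
)


def symbol_to_code(money_text: str) -> str:
    """Replace each currency symbol with its code, e.g. '$3.5 Billion' -> 'USD 3.5 Billion'."""
    return _SYMBOL_PATTERN.sub(lambda m: SYMBOL_TO_CODE[m.group(1)] + " ", money_text)


print(symbol_to_code("$3.5 Billion"))     # USD 3.5 Billion
print(symbol_to_code("€20 million"))      # EUR 20 million
print(symbol_to_code("USD 100 million"))  # USD 100 million (already a code, so unchanged)
```

Text that already uses a currency code passes through untouched, which covers the "USD 100 million" case from the question.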

Highlighting selected text in data

Highlighting the text moves away from processing data and more into the realm of web development.

Highlighting the text would require adjusting the person's web app to add some extra HTML and CSS.

While I don't have the know-how to do that, I can point you in some directions:

Highlight Searched text on a page with just Javascript

https://stackoverflow.com/questions/8644428/how-to-highlight-text-using-javascript
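On the Python side, one option is to wrap each extracted amount in an HTML mark element before the headline reaches the page; browsers highlight mark by default, and CSS can restyle it. This is a minimal sketch: highlight_spans is a hypothetical helper, it assumes the spans don't overlap, and the character offsets are assumed to come from spaCy's ent.start_char and ent.end_char.

```python
import html
from typing import List, Tuple


def highlight_spans(text: str, spans: List[Tuple[int, int]], tag: str = "mark") -> str:
    """Wrap each (start, end) character span in <tag>...</tag> and HTML-escape everything.

    Assumes the spans do not overlap.
    """
    parts = []
    cursor = 0
    for start, end in sorted(spans):
        parts.append(html.escape(text[cursor:start]))  # plain text before the span
        parts.append(f"<{tag}>{html.escape(text[start:end])}</{tag}>")  # highlighted span
        cursor = end
    parts.append(html.escape(text[cursor:]))  # plain text after the last span
    return "".join(parts)


headline = "Company XYZ gained $100 million in revenue in Q2"
start = headline.index("$100 million")
print(highlight_spans(headline, [(start, start + len("$100 million"))]))
# Company XYZ gained <mark>$100 million</mark> in revenue in Q2
```

With spaCy, the spans would be [(ent.start_char, ent.end_char) for ent in doc.ents if ent.label_ == 'MONEY'].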

Hopefully, this blog post has helped with your situation and put you on your way to completing your project.

If you want more stuff like this, check out my mailing list, where I solve many of your problems straight from your inbox.

Can Auditable AI improve fairness in models?

Not unique but very useful

I was reading an article on Wired about the need for auditable AI, which would be third-party software that evaluates bias in AI systems. It sounded like a good idea, but I couldn't help thinking it had already been done before, with Google's What-If Tool.

The author explained that an AI can be tested by changing some of its input variables and checking how it responds. For example, if the AI judges whether someone should get a loan, the audit would check whether race, gender, etc. affect getting the loan. If a person with the same income but a different gender is denied a loan, then we know the AI harbours some bias.
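The test described above is a counterfactual check: keep everything about an applicant the same, change only a sensitive attribute, and see whether the decision flips. Here is a minimal sketch in plain Python; biased_loan_model is a made-up, deliberately biased stand-in for a real model, and counterfactual_flips is a hypothetical helper, not part of any auditing tool.

```python
from typing import Callable, Dict, List, Sequence

Applicant = Dict[str, object]


def counterfactual_flips(
    model: Callable[[Applicant], bool],
    applicants: Sequence[Applicant],
    attribute: str,
    values: Sequence[object],
) -> List[int]:
    """Return the indices of applicants whose decision changes when only `attribute` changes."""
    flipped = []
    for i, applicant in enumerate(applicants):
        # Same applicant, once for each value of the sensitive attribute.
        decisions = {model({**applicant, attribute: value}) for value in values}
        if len(decisions) > 1:
            flipped.append(i)
    return flipped


def biased_loan_model(applicant: Applicant) -> bool:
    """Hypothetical model that (unfairly) asks women for a higher income."""
    threshold = 50_000 if applicant["gender"] == "male" else 60_000
    return applicant["income"] >= threshold


applicants = [
    {"income": 55_000, "gender": "female"},
    {"income": 70_000, "gender": "male"},
    {"income": 40_000, "gender": "female"},
]
print(counterfactual_flips(biased_loan_model, applicants, "gender", ["male", "female"]))
# [0]
```

Only the applicant earning 55,000 gets a different answer depending on gender. Choosing which attributes to vary, and which values to try, is where the domain knowledge comes in.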

But the author makes it sound like this is unique and has never been done before. Google's ML tools already have something like this, so the creator of an AI can already audit it themselves.

But there is power in using a third party. A third party can publish a report publicly and won't hide data that is unfavourable to the AI. So a third party can hold the creator of the AI more accountable than an audit done in-house.

Practically, how will this work?

Third parties with auditable AI

We know that not all AI will need to be audited. Your cats vs dogs classifier does not need to be audited.

The author said this will be for high-stakes AI, like medical decisions, criminal justice, hiring, etc. This makes sense.

But for this to work, it seems companies will need to buy in. For example, if an AI company does an audit and finds out its AI is seriously flawed, and no one wants the product because of it, then other companies are less likely to volunteer for audits.

Maybe the tech companies should first have some type of industry regulator that sets standards on how to audit AI and goals to achieve. A government initiative would be nice, but I don't know if the government has the know-how at the moment to create regulation like this.

Auditing the AI will require domain knowledge.

The variables that need to change for a loan application AI are different from those for an AI that decides whether a patient should get medicine. The person or team doing the auditing will need to know what they are testing. The loan application AI can be audited for racism or sexism; the medicine AI can be audited for certain symptoms or previous diseases. Either way, domain knowledge is essential.

For the medicine example, a doctor is very likely to be part of the auditing team.

On the technical side, you may want to ask the creators to add extra code to make auditing easier, like some type of API that sends results to the auditing tool. Building a separate auditing tool for every project will get bogged down fast, so some type of formal standard will be needed to make life easier for both the auditor and the creator of the AI.

This auditable AI idea sounds a bit like pen testing in the cybersecurity world, as you're (ethically) stress testing the system. In this context, we are stress testing how the AI makes a decision. Technically, you could use the same idea to test the AI against adversarial attacks, but that is a separate issue entirely.

From there, it may be possible to create a standard framework for how to test AI. But as I said above, this depends on the domain of the AI, so it may not scale well, or the standards will need to stay general enough to cover most auditing situations.

Common questions when auditing an AI:

How do you identify the important features relating to the decision?

Which features could be classed as discrimination if a decision is based on them?

e.g. gender, race, age

How do you make sure the AI does not contain any hidden bias?

And so on.

It may be possible for auditable AI to be run by some type of industry board that acts as the industry's own regulator. The board could set frameworks on how to craft auditable AI, drawing on people with domain knowledge, and the people designing the audited AI could keep those ideas and metrics in mind when developing the AI in the first place.

Auditable AI run by a third-party group could work as some type of oversight board or regulator before important AI gets released to the public.

It is a good idea to do regular audits on the AI after release, as new data will have been incorporated into it, which may affect its fairness.

Auditable AI is a good step, but not the only step

I think most of the value comes from new frameworks overseeing how we implement AI in many important areas. Auditable AI is simply a tool to help with that problem.

In some places, auditable AI tools will likely be internal. I can't imagine the military opening up its AI tools to the public. But it would be useful for the army to know its AI makes good decisions, like working out what triggers a drone to label an object as an enemy target.

Auditable AI may simply be a tool for debugging AI. That's a great thing, don't get me wrong, and something we all need. But it may not be earth-shattering.

And as many people find out when dealing with large corporations or bodies like governments, they may drop your report and continue what they were doing anyway. A company saying it is opening its AI to third-party scrutiny is great PR-wise. But will it be willing to make the hard decisions when the audit tells it that its AI has a major bias, and fixing that will cause a serious drop in revenue?

Will the company act?

Auditable AI is a great tool that we should develop and look into. But it will not solve all our ethical problems with AI.

Forecasting time series data with outliers: how I failed

How I got started

I was researching how to deal with outliers in time series data, and it led me down a big rabbit hole.

I noticed that there is a lot of material on how to detect outliers (anomaly detection), but not much information on how to deal with them afterwards.

I wanted to detect outliers in data I planned to forecast. The idea is that you may need to forecast data with heavy shocks. Think of the airline industry after the massive lockdowns.

For data that isn't a time series, there is plenty of information about dealing with and removing outliers: if values fall in the far ranges, you can remove them, using things like the z-score.
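As a minimal sketch of that z-score approach with pandas: zscore_filter is a hypothetical helper, the readings are made up, and the threshold of 3 standard deviations is a common rule of thumb rather than a fixed rule.

```python
import pandas as pd


def zscore_filter(values: pd.Series, threshold: float = 3.0) -> pd.Series:
    """Keep only the values within +/- threshold standard deviations of the mean."""
    z_scores = (values - values.mean()) / values.std()
    return values[z_scores.abs() <= threshold]


# Made-up readings around 10, with one extreme value at the end.
readings = pd.Series([10, 11, 9, 10, 12, 8, 10, 11, 9, 10,
                      10, 11, 9, 10, 12, 8, 10, 11, 9, 100])
cleaned = zscore_filter(readings)
print(len(readings), len(cleaned))  # 20 19 (the 100 is dropped)
```

Two caveats: with very few points, a single large outlier inflates the standard deviation enough to hide itself, and on a time series, dropping a point leaves a gap in the timeline, which is one reason time series outliers need more care.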

When starting the project, I wanted to use time series data that I knew had a major shock, so I chose a dataset of air passengers in Europe, because of the major drop in demand in 2020. But while I had used it before, I found it very difficult to select the data I wanted, so I eventually gave up.

That’s Failure 1

So I thought I would create a fake dataset that acts like time series data. I settled on a sine wave, as this data has a clear repeating pattern (going up and down) across time, so I thought it should be easy to forecast.

As I wanted to learn about outliers, I manually edited them into the dataset myself.
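A sine wave with a few hand-placed outliers can be sketched with NumPy and pandas like this. The sample count, the 100 samples per unit of time, and the outlier positions and sizes are all assumptions for illustration, not the settings used in this post.

```python
import numpy as np
import pandas as pd

# Assumed setup: 500 samples, 100 samples per unit of time, frequency 5 (one cycle every 20 samples).
n_samples = 500
t = np.arange(n_samples) / 100
values = np.sin(2 * np.pi * 5 * t)

# Hand-placed outliers (hypothetical positions and sizes), well outside the wave's [-1, 1] range.
outlier_positions = [50, 130, 260, 340, 450]
values[outlier_positions] = [4.0, -4.5, 5.0, -3.5, 4.5]

df_with_outliers = pd.DataFrame({"value": values})
print(df_with_outliers["value"].describe())
```

Keeping the outlier positions in a list makes it easy to check later which ones a detector actually found.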

Finding the outliers

So now the work of detecting the outliers began. This took longer than expected, as I scrolled through a few blog posts working out how to detect anomalies. I settled on this Medium article. While the article was good, I struggled to adapt it to my project.

Then I noticed the first major roadblock holding me back: I was using NumPy only, as it was what I used to produce the sine wave data, and I thought I could use separate lists and plot them. This made life more difficult than it should have been, dealing with the different shapes of the arrays and whatnot. So I started using pandas.

That’s Failure 2

Now I started making more progress, as my code more closely resembled the code from the tutorial.

For the anomaly detection, I used an Isolation Forest, as it was a popular technique I saw thrown around for anomaly detection, and I hadn't used it before.

The idea was to try multiple techniques and compare them, but that was taking too much time. The tutorial had the option of Isolation Forest, so I went with that.

There was a bit of an issue plotting the data with markers, but it eventually got solved by making a copy of the dataset for the data visualisation.

While it was able to spot the outliers I added, it also flagged a few false positives. I don't know why.
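One possible reason for false positives, offered as a guess since the tutorial's settings aren't shown here, is the contamination parameter of scikit-learn's IsolationForest. It sets the fraction of points the model labels as anomalies, so if it is larger than the real share of outliers, some normal points get flagged to fill the quota. This minimal sketch rebuilds a similar noisy sine wave (the noise level, outlier positions, and contamination=0.02 are assumptions) with five injected outliers in 500 points and asks for roughly 2% to be flagged, about twice as many as there really are.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import IsolationForest

# Assumed data: a noisy sine wave (period of 20 samples) with five hand-placed outliers.
rng = np.random.default_rng(0)
t = np.arange(500) / 100
values = np.sin(2 * np.pi * 5 * t) + rng.normal(0, 0.05, size=500)
outlier_positions = [50, 130, 260, 340, 450]
values[outlier_positions] = [4.0, -4.5, 5.0, -3.5, 4.5]
df = pd.DataFrame({"value": values})

# contamination is the fraction of points the model labels as anomalies (-1).
# 2% of 500 is about 10, double the 5 real outliers, so some normal points will likely be flagged.
model = IsolationForest(contamination=0.02, random_state=0)
df["anomaly"] = model.fit_predict(df[["value"]])

flagged = df[df["anomaly"] == -1].index.tolist()
print(sorted(set(flagged) - set(outlier_positions)))  # normal points flagged anyway
```

Setting contamination closer to the true share of outliers, or leaving it at its default of "auto", may cut down the false positives.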

Once I was able to plot the outliers correctly, I moved on to the forecasting model.

Forecasting the data

I got the code for the forecasting model here. It was not long before I ran into issues.

First, I had to fix a minor issue with outdated import code. By finding the new function name through the auto-suggest feature in Google Colab, I was able to fix it.

Then I ran into the issue of the data not being stationary. I thought it was something I could skip, at the cost of the model giving worse results. But I was wrong: statsmodels could not fit the data unless it was stationary.

So I went back to the code where I was plotting the data and started work on differencing. Luckily, I already had experience doing this; I just needed a refresher from a blog post.

A major thing I noticed was that a normal pandas diff did not make the data stationary, so I had to customise it. Unexpectedly, a double difference, with the lag of the second difference set to 20, worked. The sine wave frequency was set to 5, but using 5 as the lag did not make the data stationary.

```python
# First difference, then a second difference with a lag of 20 samples
normal_series_diff = normal_df['value'].diff().diff(20).dropna()
```
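A likely explanation for why 20 worked and 5 did not, assuming the wave was generated as sin(2π · 5 · t) with 100 samples per unit of time (the original setup isn't shown, so this is a guess): the frequency counts cycles per unit of time, but diff(n) counts samples, and 100 samples divided by 5 cycles gives a period of 20 samples. Differencing at the period cancels the repeating pattern, and the same holds after the first diff(), since differencing a sine wave gives another sine wave with the same period. A side effect is that a noise-free sine wave differenced at exactly its period becomes (numerically) all zeros, which may also be why the model later printed predicted=-0.000000, expected=0.000000 and complained about convergence.

```python
import numpy as np
import pandas as pd

samples_per_unit = 100  # assumption: 100 samples per unit of time
frequency = 5           # cycles per unit of time, as in the post
period = samples_per_unit // frequency  # samples per cycle: 20

t = np.arange(500) / samples_per_unit
wave = pd.Series(np.sin(2 * np.pi * frequency * t))

by_period = wave.diff(period).dropna()        # y[k] - y[k - 20]: one full cycle apart
by_frequency = wave.diff(frequency).dropna()  # y[k] - y[k - 5]: only a quarter cycle apart

print(period)                         # 20
print(by_period.abs().max() < 1e-9)   # True: the repeating pattern cancels out
print(by_frequency.abs().max() > 1)   # True: a quarter-cycle shift leaves the wave in place
```

On real data with noise, differencing at the period removes the seasonal cycle but leaves the noise behind for the model to work with.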

After that, I was able to fit the data into the model.

Then I ran the forecast, using the data I trained on as the measure. statsmodels gave me ConvergenceWarnings and did not print the predicted vs expected values correctly.

It printed them like this:

predicted=-0.000000, expected=0.000000

predicted=0.000000, expected=-0.000000

But I was still able to get the graph though:

The RMSE, however, was broken.

I decided to move on to forecasting the data with the outliers. I did the same thing as above, but this time there were no issues or warnings.

Example:

predicted=-0.013133, expected=-4.000000

predicted=1.574975, expected=4.000000

As we can see, the forecast looks a bit odd.

A major thing I did not do was tune the model parameters, nor did I check the autocorrelation to work them out. I wanted to move fast, but in hindsight that may have been a mistake.

That’s failure number 4

Next, I wanted to forecast the data with the outliers removed.

This was the code to do that:

```python
# Drop every row the Isolation Forest labelled as an anomaly (-1)
outliers_removed.drop(outliers_removed[outliers_removed['anomaly2'] == -1].index, inplace=True)
```

And this is what it looked like:

Then I differenced the data to make it stationary, like I did earlier.

This is the result of the model:

This is probably the best one.

With statsmodels printing out real values:

predicted=-0.061991, expected=-0.339266

predicted=-0.236140, expected=0.339266

predicted=-0.170159, expected=0.057537

predicted=0.031753, expected=0.079192

predicted=0.095567, expected=0.093096

predicted=0.104848, expected=0.097887

The aftermath

After all this, I have more questions than answers. Why did the dataset with the outliers removed do the best, while the normal data struggled to be forecast?

What should one do with outliers in time series data?

Should you just remove them, like I did, or try to smooth them out somehow?
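One way to smooth outliers out instead of dropping rows is to blank out the flagged points and interpolate across them, which keeps every time step in place (dropping rows leaves gaps that a forecasting model may treat as if the remaining points were consecutive). This is a minimal sketch with pandas linear interpolation; the anomaly column follows the Isolation Forest convention of -1 for outliers, and the tiny series is made up for illustration.

```python
import pandas as pd

# Made-up series: a smooth ramp with one spike at step 3, flagged -1 like IsolationForest output.
df = pd.DataFrame({
    "value": [0.0, 1.0, 2.0, 40.0, 4.0, 5.0],
    "anomaly": [1, 1, 1, -1, 1, 1],
})

# Replace flagged values with NaN, then fill the gaps by linear interpolation from their neighbours.
df["smoothed"] = df["value"].mask(df["anomaly"] == -1).interpolate(method="linear")

print(df["smoothed"].tolist())  # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
```

Linear interpolation is the simplest choice; on a seasonal series, filling the gap from the same point in the previous cycle might fit the pattern better.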

How should you create a fake dataset for time series? When I googled the question, people just used NumPy's random functions, which I know work for testing out a toy dataset.

But they are horrible for forecasting, due to the lack of any patterns in the data.
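A common middle ground is to build the fake series from parts you control: a trend, a repeating seasonal cycle, and a little noise. This minimal sketch (the slope, the period of 50 steps, and the noise level are arbitrary choices) compares it with pure random noise using lag-1 autocorrelation, a rough measure of how much each value tells you about the next one.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
steps = np.arange(500)

trend = 0.01 * steps                          # slow upward drift
seasonality = np.sin(2 * np.pi * steps / 50)  # cycle that repeats every 50 steps
noise = rng.normal(0, 0.1, size=steps.size)   # small random wiggle
structured = pd.Series(trend + seasonality + noise)

pure_random = pd.Series(rng.normal(0, 1, size=steps.size))

# Lag-1 autocorrelation: near 1 means the next value is predictable from the current one.
print(round(structured.autocorr(lag=1), 2))   # close to 1
print(round(pure_random.autocorr(lag=1), 2))  # close to 0
```

Because each component is known, you can also check whether a forecasting model recovers the trend and the cycle.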

I know this because I did a project where I used an RNN to forecast a randomly generated dataset, and it struggled because the data was random.

If you want more failures like these 😛, check out my mailing list.

How to avoid shiny object syndrome

If you're a programmer who has suffered from this issue before, this chain of events may happen to you way too often. You are working on a project, then you find out on Reddit about an interesting library you could check out. You spend your whole day reading the documentation and trying out the library. But by the end of the day, you notice that your original project has made little progress.

If that's you, you suffer from shiny object syndrome.

This blog post should be the antidote.

Why is shiny object syndrome dangerous?

You probably know why. You spend time running around in circles, trying the next best thing, with little to show for it.

Shiny object syndrome reduces progress on many of your projects because of the time spent switching between them and starting new ones from scratch.

Imagine constructing a building, then deciding halfway through to stop and build another one. You need to disassemble your equipment and move it to the next construction site.

All that time and effort could have been spent making more progress on the original building.

When starting a new project, we forget that we need to spend time transitioning into it. That means researching how to start the next project and setting up your workspace for it. If your new project is not similar to your original one, you may also have to learn the new skills it requires.

For example, if you're a web developer and you decide to work on a blockchain project, you will most likely need to spend time learning the ins and outs of blockchain technology, which will take a lot of time.

Because of this, there are a lot of risks in jumping from one project to another.

If you want to avoid this fate, I recommend the courses of action below.

How to stop shiny object syndrome?

Wait a minute and breathe.

Often you start a project after reading a great blog post about someone else's project, and you think to yourself, "I want to do that!" Then you start googling the resources the author used, getting ready for an awesome new project.

Don't get me wrong. Getting excited is a great emotion, but it sometimes leaves you spinning your wheels.

Instead of instantly starting a project based on your excitement, I recommend writing your ideas down in a notebook, ideally an online one like Evernote, so you always have access to it.

This helps you get back to the task at hand. Checking the idea again after some time has passed removes the euphoria of the moment, so you can see it with rational eyes. You may notice the idea was not that good in the first place, and you have avoided wasting time on a project that would have been a dead end.

If you still think the idea is good, then start adding more details and plans.

Timebox space to explore new ideas

New technologies are awesome, which may be part of the reason you're in the community in the first place. But learning about new technologies takes a lot of time. So you may want to set aside time during your day or week to explore the technology you're most passionate about. This lets you explore your interests without derailing your main task for the day or week, and the exploring can help you think about your next project when you are ready.

Have a plan to ship #BuildInpublic

Having a deliverable is a great way to create accountability, and it makes you more likely to stay the course. If you want to do this on hard mode, make it a public statement so everybody expects a product to be shipped. You are WAY less likely to abandon ship if people are expecting a product from you.

Once you start getting feedback from an audience, you may get an extra spike of motivation to keep going.

A term you should research further is learning in public (also called building in public). This is where you share information about your project from start to finish. Besides the reason above, it gets more eyeballs on your project, giving it a better chance of succeeding. Feedback from the public should also make the project better, as you now have other people suggesting improvements. Learning in public can help your next project too: if your project is interesting, people may stick around to see what you have in store.

Does the shiny object match your goals?

Maybe you have a general direction you want your projects to go in. If that's the case, you can ask: does the shiny object help me achieve my goals? Will this new technology help me do my job better? Will it reduce the amount of time you need to work, or let you get more work done in the same amount of time?

Asking precisely "How will this help me?" should help you avoid going down a cul-de-sac.

Conclusion

Now I have given you a few ways to battle shiny object syndrome, so you can see your project through from start to finish without jumping onto the next one that piques your interest.

Gatekeepers still exist in the internet age

The Netflix reject to YouTube superstar

I was reading an interesting Indie Hackers post about the growth of Andrew Schulz, a very funny American comedian. The post documented his journey from Netflix reject to popular social media comedian racking up millions of views per video.

The post starts with his first special. He filmed it himself, but Netflix did not want it, so he put the video up on YouTube. It got a nice reception, but let's be honest: lots of people are not going to watch a one-and-a-half-hour comedy special on YouTube.

So he tried more stuff.

He started taking highlights from his stand-up and putting them on YouTube, and people watched them a lot. One type of video gaining traction for him was roasts. So each night he did stand-up, he would try to roast a few people in the audience so he could add that to YouTube. That way, he had content every week.

Doing that, he gained more and more views. Then a turning point came when coronavirus hit and comics couldn't do stand-up anymore. In a great bit of creativity, Andrew started a late-night style show where he jokes about topical events. Many late-night TV hosts struggled without an audience, since the audience had acted as their laugh track, and because they had to appease network executives, their jokes were soft. Andrew Schulz did not hold back and roasted everything. Nothing was too far for him.

This show went on to rake in millions of views.

It's a great story that teaches us about building an audience: if a traditional gatekeeper does not let you in, then you need to make your own audience.

That's a great lesson, but it isn't the whole story.

Online Gatekeepers

I would like to add a caveat. YouTube and Instagram are still gatekeepers. If you say lots of horrendous stuff, you will get kicked off. So maybe the lesson is: don't rely on one gatekeeper for success, try multiple. It's a bit like diversifying your portfolio: you invest in many things so that if one asset doesn't do well, the rest will.

A great lesson from Schulz is to tailor your content to the platform. Those are the ground rules once the gatekeepers let you in. His special was great, but people don't watch 2-hour videos on YouTube, so he moved to clips of less than 5 minutes and gained more success. On Instagram, he starts with a funny or interesting hook, then asks the viewer to turn their phone around. This is a cool way to get your audience's attention and keep it. His topical roast show can't work well as vertical video, as many of the pictures on the side are the joke.

This could not work on vertical video

In that article, it said: "Schulz realised he was going to have to make it alone. So he started analysing how people actually watch comedy." So you go to the places where people are actually watching comedy and tailor your content.

Many creators mention this: you want to move your audience from an open platform to a platform you own, normally email, because you don't control the open platform. If you have a million Twitter followers but get banned, you have no recourse, and it will be almost impossible to contact them again. So you must leverage platforms for your own benefit. Maybe create a private community where you and your audience can share more work.

This is the cost of dealing with other gatekeepers. You are following their rules, and they can kick you out for any reason. Their house, their rules.

This is why you want to deal with many gatekeepers, so you are not finished when one kicks you out.

If you are a sane person, you don't need to worry about that. What's more likely to happen than getting kicked out is that they throttle your traffic and tell you to buy ads on their platform. (Cough cough, Facebook.)

If you don't want that happening to you, have your own place, like a website and a mailing list. It's a pretty raw deal to pay to access your own audience.

How to normalise columns separately or together

Normalisation is a standard practice in machine learning. It helps improve results by making sure your features share the same scale.

How should you normalise those features?

Should you do them all at the same time?

Normalise the columns separately?

This is a question you may face if your dataset has a lot of features.

So what should you do?

I'm writing this because a Reddit user asked this question:

Is it better to normalize all my data by the same factor or normalize each feature/column separately.

Example: I am doing a stock prediction model that takes in price and volume. A stock like Apple has millions of shares traded per day while the price is in the hundreds. So normalizing my entire dataset would still make the price values incredibly small compared to volume. Does that matter? Or should I normalize each column separately?

This is a valid question. And something you may be wondering as well.

In this redditor's case, I would normalise the columns separately, as I think the difference between volume and price is too big. It's hard to imagine one scaling factor that works for both a volume of 1 million and a price of $40.

But in your case, it may not be needed. If the highest value in your dataset is around a hundred and the lowest is around 10, I think that's fine, and you can normalise the whole dataset.

There are other reasons why you might want to normalise columns separately.

Maybe you don't want to normalise all of your columns, because the exact values in some columns are important. One-hot encoded columns are an example, where having exactly two values (0 and 1) matters.

Or maybe you can't practically do so, because a couple of columns are text data types.

To be fair, not normalising all of your dataset is not a big issue. If your dataset is normalised, it may not matter that some values end up as small as 0.0001, as the values can be converted back after putting them through the model. But it may be easier to normalise specific columns rather than the whole dataset, as the values may be easier to understand, and you may feel the other columns would not suffer much if they are left unnormalised.
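Converting scaled values back is what sklearn's `inverse_transform` is for. A minimal sketch, assuming you use MinMaxScaler as in the examples in this post; the price and volume numbers are made up for illustration:

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Made-up price/volume data on very different scales
df = pd.DataFrame({'price': [40.0, 42.5, 39.0, 41.0],
                   'volume': [1_000_000, 1_250_000, 900_000, 1_100_000]})

scaler = MinMaxScaler()
scaled = scaler.fit_transform(df)  # every value now lies between 0 and 1

# ... train a model on `scaled` here ...

# inverse_transform maps scaled values back to the original units
restored = pd.DataFrame(scaler.inverse_transform(scaled), columns=df.columns)
```

The same fitted scaler has to be kept around, because it stores each column's minimum and maximum.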

Like most things in data science, the answer is to test and find out.

Try normalising the columns separately, then the whole dataset. See which gives you better results, then run with that one.

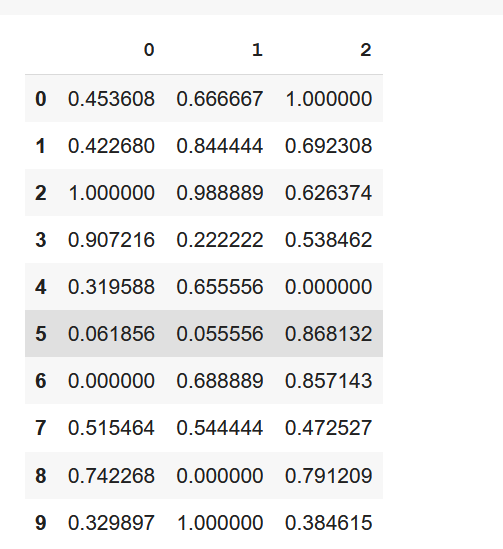

Whatever your answer, I will show you how to normalise for each of the options.

How to normalize one column?

For this, we use the preprocessing module from the sklearn library, specifically MinMaxScaler.

```python
import pandas as pd
from sklearn import preprocessing

data = {'nums': [5, 64, 11, 59, 58, 19, 52, -4, 46, 31, 17, 22, 92]}
df = pd.DataFrame(data)

x = df  # save the dataframe in a new variable
min_max_scaler = preprocessing.MinMaxScaler()  # create the min-max scaler
x_scaled = min_max_scaler.fit_transform(x)  # fit the scaler and transform the data
df = pd.DataFrame(x_scaled)  # save the scaled data into a dataframe
```


Now your column is normalised. Wrapping the scaled NumPy array in a new DataFrame drops the original column name, so you may want to rename the column back to its original name.

Normalizing multiple columns

If you want to normalise many columns, you don't need to do much extra. Create a subset of the columns you want to normalise, then scale that subset.

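```python
import pandas as pd
from sklearn import preprocessing

data = {'nums_1': [39, 36, 92, 83, 26, 1, -5, 45, 67, 27],
        'nums_2': [57, 73, 86, 17, 56, 2, 59, 46, -3, 87],
        'nums_3': [97, 69, 63, 55, 6, 85, 84, 49, 78, 41]}
df = pd.DataFrame(data)

cols_to_norm = ['nums_2', 'nums_3']  # the subset of columns to normalise
x = df[cols_to_norm]
min_max_scaler = preprocessing.MinMaxScaler()
x_scaled = min_max_scaler.fit_transform(x)
# Keep the original df intact and label the scaled columns
df_scaled = pd.DataFrame(x_scaled, columns=cols_to_norm)
```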

You can merge the columns back into the original dataframe if you want to.
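A common shortcut is to skip the separate dataframe and write the scaled values straight back into the selected columns. A minimal sketch with the same data:

```python
import pandas as pd
from sklearn import preprocessing

data = {'nums_1': [39, 36, 92, 83, 26, 1, -5, 45, 67, 27],
        'nums_2': [57, 73, 86, 17, 56, 2, 59, 46, -3, 87],
        'nums_3': [97, 69, 63, 55, 6, 85, 84, 49, 78, 41]}
df = pd.DataFrame(data)

cols_to_norm = ['nums_2', 'nums_3']
min_max_scaler = preprocessing.MinMaxScaler()

# Overwrite only the selected columns; nums_1 keeps its original values
df[cols_to_norm] = min_max_scaler.fit_transform(df[cols_to_norm])
```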

Normalising the whole dataset

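This is the simplest option, and probably something you already do.

It works like normalising a single column; we just use the whole dataframe instead. Note that MinMaxScaler computes a separate minimum and maximum for each column, so this still scales every column on its own range rather than by one shared factor.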

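```python
import pandas as pd
from sklearn import preprocessing

# df is the dataframe you want to normalise, e.g. one of the dataframes above
x = df
min_max_scaler = preprocessing.MinMaxScaler()
x_scaled = min_max_scaler.fit_transform(x)
df_normalized = pd.DataFrame(x_scaled, columns=x.columns)
```

Now we have normalised a dataframe.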
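MinMaxScaler fits a separate minimum and maximum for every column. If you really want the Reddit user's other option, normalising everything by the same factor, you can compute one global minimum and maximum yourself. A sketch using plain pandas, with the variable names chosen for illustration:

```python
import pandas as pd

data = {'nums_1': [39, 36, 92, 83, 26, 1, -5, 45, 67, 27],
        'nums_2': [57, 73, 86, 17, 56, 2, 59, 46, -3, 87],
        'nums_3': [97, 69, 63, 55, 6, 85, 84, 49, 78, 41]}
df = pd.DataFrame(data)

# One minimum and one maximum for the whole table, instead of one per column
values = df.to_numpy(dtype=float)
global_min, global_max = values.min(), values.max()
df_global = (df - global_min) / (global_max - global_min)
```

Only the overall smallest value (-5) maps to 0 and the overall largest (97) maps to 1, so a column like nums_2 never reaches 1. With price and volume data, this is exactly why the prices would end up squashed near 0.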

Now you can go on your merry way.

Social Media isn't just a reflection of human nature

Social media isn't just a reflection of human nature. It's a force that defines human nature, through incentives baked into the way products are designed.

The title is a line from the book No Filter, where the author argues that Instagram is not a neutral piece of technology but a tool that gives users incentives to use the product in a certain way. This line reminds me of Neil Postman's Amusing Ourselves to Death, where Postman argued that a medium changes how its users see the world. In the book, his example is television. TV is a visual medium, so things done on TV are done with the express purpose of entertaining. Things on TV are supposed to be visually pleasing or shocking, like good-looking TV presenters or explosions in movies.

This reminds me of a YouTube video I watched about how beautiful people dominate the music industry, while less good-looking people are locked out, even though they may be more talented. The YouTuber explained that before music videos, most musicians did not conform to the beauty standards of the time. They also tended to be in rock and punk bands, which tended to be anti-establishment.

But then music videos took off with MTV, and a lot more production went into them: storylines, stunts and whatnot. Pretty soon, music directors and industry executives worked out that if they put attractive people on the cover, the music did well. So a process started where good-looking people, men or women, were chosen to make music videos, and music in general. (This affected women more than men, I might add.)

This is why, if you look at the top acts right now, especially female ones, they tend to be good-looking. A good YouTube comment on the video said: “You don’t see any ugly female musicians but you may see male ones”. So you could argue there is some sexism baked into this system, where a woman is judged more on her appearance than her male counterparts are.

Back to the title. Social media changes human nature because of its incentives. As in the TV example I gave earlier, people morph their behaviour to fit the mould of the medium. Instagram is a place to show off social status, so people do things that make them look high-status, like showing off wealth, travelling around the world and being beautiful. Instagram is designed to be visual, so people put a lot of work into editing photos and making sure the backdrop and lighting are good, to get a great photo.

YouTube rates watch time highly, so people make videos of around 20 minutes, with a few emotional spikes here and there to keep the viewer hooked. Because of YouTube's design, you choose from a selection of tons of videos, so YouTubers use eye-catching thumbnails and titles to make you click on their video.

As people try to please the algorithms of these social media sites, the sites become less of a neutral force and more a way of pushing people in one direction in how they make and view content. It's what Neil Postman described with TV: people started to fit content to the medium, with more focus on visuals and entertainment than on detail and thoroughness.

For example, on Twitter you can't write essays, so short statements are necessary. In some ways short statements are good, as they force the writer to compress their thoughts into their bare components. One of the advantages is that smart people distil books' worth of knowledge into a tweet. Think of James Clear and Naval.

But if you want to bring attention to yourself, outrage is the way to go. Twitter's design lets people share and comment very easily, which can make a tweet go viral. Outrage works because it's hard to make nuanced statements within the 280-character limit, so it's easier to make a statement that's not true and let people fill in the gaps or try to correct you. This is also why many Twitter users say you should add a spelling mistake to your tweets: people comment on your post when they try to correct you. Because outrage is so effective, Twitter is known for having a bad culture, as people use these tricks to bait others into talking about them.

This is not to say outrage is only a Twitter problem. I have touched on this issue elsewhere. YouTube, for the longest time, had issues with conspiracy theory videos appearing in its recommendation feed. Facebook still has an issue with outrage content.

But while they have their issues, I wouldn't call these services a negative force. I would say they are a net positive, but users do need to take extra action to keep them that way. Cal Newport's Digital Minimalism talks about this. Some services, like YouTube, are strongly on the net-positive side but still have issues, like being a serious source of distraction. I'm fully convinced that social media is not all negative, contrary to what many people think.

People say Twitter is a great networking tool. People use Facebook to keep up with friends and family. Instagram is a great way to show off your hobbies. You can argue that there may be better ways to keep up with friends and family than Facebook, but it does the job.

But I think we should avoid moral panic. Yes, these tools have issues, and they need to be fixed. But we should also put some responsibility on individuals to use them well.

That means disabling notifications and limiting time spent in apps. If outrage bait is a problem, unsubscribe from any account that produces or shares it.

I have learned that if we are more mindful about how we use social media, the experience becomes a lot more pleasant.

How to check if your model has a data problem

Sometimes you run your model and the results are mediocre. That may be a problem with the model itself, but it may also be a problem with your data. If you suspect your model is underperforming because of its data, you can try a few things.

Do you have enough data?

Make sure you have enough data. This is your call, and it will depend on the type of data you are dealing with. For example, around 100 images can be just enough before you add image augmentation. Tabular data may need a bit more.

Jason Brownlee mentions that:

The amount of data required for machine learning depends on many factors, such as:

The complexity of the problem, nominally the unknown underlying function that best relates your input variables to the output variable.

The complexity of the learning algorithm, nominally the algorithm used to inductively learn the unknown underlying mapping function from specific examples.
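One practical way to judge whether more data would help is a learning curve: train on growing slices of the data and watch the validation score. If it is still climbing at the largest size, more data will likely help; if it has flattened, probably not. A sketch using sklearn's `learning_curve`, with the bundled digits dataset and a k-nearest-neighbours classifier as stand-ins for your own data and model:

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import learning_curve
from sklearn.neighbors import KNeighborsClassifier

X, y = load_digits(return_X_y=True)  # stand-in dataset bundled with sklearn

# Train on 10% ... 100% of each training fold and score on the held-out fold
train_sizes, train_scores, test_scores = learning_curve(
    KNeighborsClassifier(), X, y,
    train_sizes=np.linspace(0.1, 1.0, 5), cv=5,
    shuffle=True, random_state=0,
)

for size, score in zip(train_sizes, test_scores.mean(axis=1)):
    print(f"{int(size):5d} training samples -> mean validation accuracy {score:.3f}")
```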

Do you have a balanced dataset?

Does your data consist mostly of one class? If so, you may want to change that. Data like that skews the results one way, and the model will struggle to learn about the other classes. If you have this issue, adding more data from the other classes can help.
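A quick way to check is to look at each class's share of the rows. A small sketch with made-up labels:

```python
import pandas as pd

# Made-up labels: 90 'ok' rows and 10 'fraud' rows
df = pd.DataFrame({'label': ['ok'] * 90 + ['fraud'] * 10})

# Share of each class; one class dominating is the warning sign
class_share = df['label'].value_counts(normalize=True)
print(class_share)
```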

You could also try under-sampling, which means deleting data points from the majority class. On the flip side, you can try oversampling, which means simply copying minority-class samples to get more of them.

If your data has a few outliers, you may want to get rid of them. This can be done using the Z-score or the interquartile range (IQR).
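Both ideas can be sketched with plain pandas random sampling. This assumes a simple two-class `label` column with made-up data; dedicated libraries offer more options, but the core idea is just this:

```python
import pandas as pd

# Made-up imbalanced data: 90 'ok' rows and 10 'fraud' rows
df = pd.DataFrame({'feature': range(100),
                   'label': ['ok'] * 90 + ['fraud'] * 10})

majority = df[df['label'] == 'ok']
minority = df[df['label'] == 'fraud']

# Under-sampling: randomly keep only as many majority rows as there are minority rows
under = pd.concat([majority.sample(n=len(minority), random_state=0), minority])

# Oversampling: randomly copy minority rows (with replacement) until they match the majority
over = pd.concat([majority, minority.sample(n=len(majority), replace=True, random_state=0)])
```

Resample only your training data. If copied rows leak into the test set, the evaluation will look better than it really is.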
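Here is a minimal sketch of both rules on a single made-up column. The 1.5 × IQR fence and the |z| < 3 cut-off are common conventions, not fixed rules:

```python
import pandas as pd

# Made-up values: twenty ordinary readings and one obvious outlier (500)
df = pd.DataFrame({'value': [10, 11, 12, 13] * 5 + [500]})

# IQR rule: keep rows within 1.5 * IQR of the first and third quartiles
q1 = df['value'].quantile(0.25)
q3 = df['value'].quantile(0.75)
iqr = q3 - q1
df_iqr = df[df['value'].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)]

# Z-score rule: keep rows within 3 standard deviations of the mean
z = (df['value'] - df['value'].mean()) / df['value'].std()
df_z = df[z.abs() < 3]
```

The z-score rule needs a reasonable number of rows to work. In a very small sample, a single outlier inflates the standard deviation so much that it may never reach a z-score of 3.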

Is your data actually good?

I'm talking about rookie mistakes like blank rows and missing numbers, which can be fixed with a few pandas operations. Because they tend to be so small, they are easy to miss.

Assuming you are using pandas, you can get rid of missing (N/A) values with df.dropna().
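It is worth counting the gaps before dropping anything, so you know how many rows you will lose. A small sketch with made-up data:

```python
import numpy as np
import pandas as pd

# Made-up data with one missing price and one missing room count
df = pd.DataFrame({'price': [100.0, np.nan, 120.0, 95.0],
                   'rooms': [3, 2, np.nan, 4]})

print(df.isna().sum())  # number of missing values per column

df_clean = df.dropna()  # drop any row with a missing value
df_price_only = df.dropna(subset=['price'])  # only drop rows missing a price
```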

Do you need all of the columns in your dataset? If not, drop the ones you don't. For example, if you are analysing house prices, the name of the resident is not a useful factor for the analysis. Similarly, if you're analysing the weather of a certain area, data from 10 other areas is of no interest to you.

To make life easier for yourself, if you are using pandas, make sure the index is correct. It prevents headaches later on.

Check the data types of your columns, because they may contain values of different data types. For example, if your DATE column is a text data type, you may want to convert it to a pandas datetime type for later data manipulation.

Also, some of your values may have extra characters that force them to be a different data type. For example, if one of your columns should be a float, but one of the values looks like this: 9.0??, then pandas will treat the whole column as text (object dtype), giving you problems later on.
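Dropping an irrelevant column and setting a proper index are one-liners in pandas. A sketch using made-up house-price data, where the column names are hypothetical:

```python
import pandas as pd

# Made-up house-price data with a column that is irrelevant to the analysis
df = pd.DataFrame({'house_id': [101, 102, 103],
                   'resident_name': ['Ann', 'Bob', 'Cara'],
                   'price': [250_000, 310_000, 275_000]})

df = df.drop(columns=['resident_name'])  # not a useful factor for price
df = df.set_index('house_id')  # use the ID as the index instead of as a feature
```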
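Both problems can be handled with pandas' conversion functions. A sketch with a made-up DATE column and a made-up `reading` column containing the stray value; `errors='coerce'` turns anything unparseable into NaN so you can find and fix those rows:

```python
import pandas as pd

# Made-up data: dates stored as text, and one float with stray characters
df = pd.DataFrame({'DATE': ['2021-01-01', '2021-01-02', '2021-01-03'],
                   'reading': ['8.5', '9.0??', '7.25']})

print(df.dtypes)  # both columns are 'object', i.e. text

# Convert the text dates into pandas datetimes
df['DATE'] = pd.to_datetime(df['DATE'])

# Convert to floats; values that can't be parsed (like '9.0??') become NaN
df['reading'] = pd.to_numeric(df['reading'], errors='coerce')
print(df[df['reading'].isna()])  # rows that need fixing
```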

Features in your data

Your dataset may contain bad features, in which case feature engineering will be needed to improve it.

You can start with feature selection, to keep only the most useful features.

Do you have useless features like name and ID? If so, remove them. That may help.

There are multiple techniques for feature selection, like Univariate Selection, Recursive Feature Elimination and Principal Component Analysis. (Strictly speaking, PCA creates new features rather than selecting existing ones, so it also counts as feature extraction.)
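The first two techniques are available in sklearn's `feature_selection` module. A sketch on the bundled iris dataset, keeping 2 of its 4 features; the dataset, the ANOVA F-test scorer and the logistic regression model are all stand-ins for your own choices:

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import RFE, SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Univariate selection: score each feature on its own with an ANOVA F-test, keep the best 2
X_kbest = SelectKBest(score_func=f_classif, k=2).fit_transform(X, y)

# Recursive Feature Elimination: repeatedly fit the model and drop the weakest feature
rfe = RFE(LogisticRegression(max_iter=1000), n_features_to_select=2).fit(X, y)
print(rfe.support_)  # True marks the features RFE kept
```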

Afterwards, you can try feature extraction. This is done by combining existing features into more useful ones. If you have domain knowledge then you can manually make your own features.
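Here is a sketch of both routes. The house columns are hypothetical, standing in for a feature you might build from domain knowledge; PCA on iris shows an automatic way of combining existing features:

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Manual feature from domain knowledge (hypothetical house data)
houses = pd.DataFrame({'price': [250_000, 310_000, 275_000],
                       'floor_area_m2': [100, 120, 110]})
houses['price_per_m2'] = houses['price'] / houses['floor_area_m2']

# Automatic extraction: PCA combines iris's 4 measurements into 2 new components
X, _ = load_iris(return_X_y=True)
X_pca = PCA(n_components=2).fit_transform(X)
```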

Do the feature scales make sense? For example, if one of your features is supposed to be in the 0 to 1 range, a value of 100 means there is a mistake. That value will skew the data one way, because it is an outlier.

Depending on your data, you can try one-hot encoding. This is a great way to turn categorical data into numeric values, which machine learning models like. You do this by splitting the categorical data into separate columns, one per category, each holding a binary value.
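A quick range check flags such values. A sketch with a made-up `ratio` column that should stay between 0 and 1:

```python
import pandas as pd

# Made-up feature that should always lie between 0 and 1
df = pd.DataFrame({'ratio': [0.2, 0.75, 100.0, 0.4]})

# between() is inclusive on both ends; ~ selects the rows outside the range
out_of_range = df[~df['ratio'].between(0, 1)]
print(out_of_range)  # the 100.0 row is flagged for a closer look
```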
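In pandas, `get_dummies` does this split for you. A sketch with a made-up `colour` column:

```python
import pandas as pd

# Made-up data with one categorical column
df = pd.DataFrame({'colour': ['red', 'green', 'blue', 'green'],
                   'size': [1, 2, 3, 2]})

# One new 0/1 column per category; the original 'colour' column is removed
encoded = pd.get_dummies(df, columns=['colour'], dtype=int)
print(encoded.columns.tolist())
```

Each row ends up with a 1 in exactly one of the colour columns and 0 in the others.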

Resources:

https://machinelearningmastery.com/discover-feature-engineering-how-to-engineer-features-and-how-to-get-good-at-it/

https://machinelearningmastery.com/feature-selection-with-real-and-categorical-data/

https://machinelearningmastery.com/feature-extraction-on-tabular-data/

https://towardsdatascience.com/feature-extraction-techniques-d619b56e31be

https://machinelearningmastery.com/data-preparation-for-machine-learning-7-day-mini-course/

How to convert non-stationary data into stationary for ARIMA model with python

If you're dealing with time series data, you may have heard of ARIMA. It may be the model you are trying to use right now to forecast your data. To use ARIMA (and many other classical forecasting models), you need stationary data.

What is non-stationary data?

Non-stationary data is data whose statistical properties, such as the mean and variance, change over time, usually because of trend and seasonal effects. This affects the model's forecasts, as consistency is important when using models: if the data has trends or seasonal effects, it is less consistent, which hurts the accuracy of the model.

Example dataset

Here is an example of some time series data:

This is the number of air passengers each month in the United Kingdom, from 2009 to 2019. You can fetch the data here.

As we can see, the data has strong seasonality: a big peak as people go on their summer holidays, and a tiny bump in the winter as people travel to avoid the cold. If you'd like to use a forecasting model, you need to change this into stationary data.

Differencing

Differencing is a popular method used to get rid of seasonality and trends. It is done by subtracting the previous observation from the current observation.
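To see why this works, here is a sketch on a synthetic monthly series made of a straight-line trend plus a 12-month cycle. The first difference turns the trend into a constant, and a second difference at lag 12 cancels both that constant and the repeating cycle:

```python
import numpy as np
import pandas as pd

t = np.arange(60)
# Synthetic monthly series: a linear trend plus a 12-month seasonal cycle
y = pd.Series(2.0 * t + 10.0 * np.sin(2 * np.pi * t / 12))

first_diff = y.diff()  # y[t] - y[t-1]: the trend becomes a constant
both = y.diff().diff(12).dropna()  # then subtract the value 12 months earlier

# diff() is exactly subtraction of the previous observation
gap = (first_diff - (y - y.shift(1))).abs().max()
print(gap)
print(both.abs().max())  # only floating-point noise remains
```

Real data will not cancel out this cleanly, but the same two steps remove a steady trend and a yearly pattern.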

Assuming you are using pandas:

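```python
df_diff = df.diff().diff(12).dropna()
```

This short line should do the job. The first diff() removes the trend, then diff(12) subtracts the value from 12 months earlier to remove the yearly seasonality, and dropna() removes the first 13 rows, which have no earlier values to subtract. Just make sure that your date column is the index. If it isn't, diff() will try to difference the date column as well, and you will get a few issues plotting the graph.

If you still want to keep your traditional index, simply create a new dataframe, keeping the columns separate and differencing only your numerical column.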

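```python
import pandas as pd

# Difference only the passenger counts: first difference, then 12-month seasonal difference
diff_v2 = df['Passengers'].diff().diff(12).dropna()
time_series = df['TIME']

# Put the dates back next to the differenced values
df_diff_v2 = pd.concat([time_series, diff_v2], axis=1).reset_index().dropna()
```

The concatenation produces NaN values because the differenced series is 13 rows shorter than the time series, so the first 13 dates have no matching value. We use the dropna() function to drop those rows. reset_index() also adds the old index as a column called index, which we drop:

```python
df_diff_v2 = df_diff_v2.drop(columns=['index'])
```

Then plot the result:

```python
from matplotlib import ticker

ax = df_diff_v2.plot(x='TIME')
# Show y-axis ticks as whole numbers with thousands separators
ax.yaxis.set_major_formatter(ticker.StrMethodFormatter('{x:,.0f}'))
```

The formatter is here because we are dealing with large tick values. It is not needed if your values are below a thousand.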

Now your data can be used for your ARIMA model.

If you found this tutorial helpful, check out the rest of the website and sign up to my mailing list to get more blog posts like this, plus short essays relating to technology.

Resources:

https://www.quora.com/What-are-stationary-and-non-stationary-series

https://www.analyticsvidhya.com/blog/2018/09/non-stationary-time-series-python/

https://machinelearningmastery.com/remove-trends-seasonality-difference-transform-python/

https://towardsdatascience.com/hands-on-time-series-forecasting-with-python-d4cdcabf8aac