How to normalise columns separately or together

Normalisation is a standard practice in machine learning. It helps improve results by making sure your features share the same scale.

How should you normalise those features?

Should you do them all at the same time?

Or should you normalise the columns separately?

This is a question you may face if your dataset has a lot of features.

So what should you do?

I'm writing this because a Reddit user asked this question:

Is it better to normalize all my data by the same factor or normalize each feature/column separately.

Example: I am doing a stock prediction model that takes in price and volume. A stock like Apple has millions of shares traded per day while the price is in the hundreds. So normalizing my entire dataset would still make the price values incredibly small compared to volume. Does that matter? Or should I normalize each column separately?

This is a valid question, and something you may be wondering as well.

In this Redditor's case, I would normalise the columns separately, as I think the difference between volume and price is too big. I can't imagine a shared scale working well when one column holds values around 1 million and the other holds values around $40.
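To make the Redditor's worry concrete, here is a small sketch using plain pandas arithmetic. The prices and volumes are made up for illustration. It compares one shared minimum and maximum for the whole table against a separate minimum and maximum per column.

```python
import pandas as pd

# Made-up prices and volumes, for illustration only
df = pd.DataFrame({
    "price": [150.0, 152.5, 149.0, 155.0],
    "volume": [60_000_000, 75_000_000, 50_000_000, 90_000_000],
})

# Option 1: one shared min and max for the whole table
global_min = df.min().min()
global_max = df.max().max()
scaled_together = (df - global_min) / (global_max - global_min)

# Option 2: each column uses its own min and max
scaled_separately = (df - df.min()) / (df.max() - df.min())

print(scaled_together)    # price values are squashed very close to 0
print(scaled_separately)  # both columns spread across 0 to 1
```

With the shared factor, the price column carries almost no visible variation next to volume, which is the problem described in the question.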

But in your case, it may not be needed. If the highest value in your dataset is around a hundred and the lowest is around 10, then I think that's fine, and you can normalise the whole dataset together.

There are other reasons why you may want to normalise columns separately.

Maybe you don't want to normalise all of your columns because the exact values in one of them are important. One-hot encoding is an example, where having exactly two values (0 and 1) matters.

Maybe you can't practically do so because a couple of columns are text data types.
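As a rough sketch of that situation, the example below uses a hypothetical dataframe with a text column, a one-hot style column and two numeric columns. The column names and values are assumptions for illustration. Only the columns listed in cols_to_norm are passed to MinMaxScaler, and the rest are left untouched.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Hypothetical mixed dataset: text, one-hot style flag, and numeric columns
df = pd.DataFrame({
    "ticker": ["AAPL", "MSFT", "AAPL", "GOOG"],
    "sector_tech": [1, 1, 0, 1],
    "price": [150.0, 300.0, 155.0, 120.0],
    "volume": [60_000_000, 30_000_000, 90_000_000, 20_000_000],
})

# Only these columns get normalised; text and one-hot columns are skipped
cols_to_norm = ["price", "volume"]
df[cols_to_norm] = MinMaxScaler().fit_transform(df[cols_to_norm])

print(df)
```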

To be fair, not normalising every column is not a big issue. Even if the whole dataset is normalised, it may not matter that your values end up looking like 0.0001, as the values can be converted back after putting them through the model. But it may be easier to normalise specific columns rather than the whole dataset, as the remaining values may be easier to understand, and you may feel the other columns would not suffer much if they are left unnormalised.
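Converting values back can be done with the scaler's inverse_transform method, as long as you keep the fitted scaler around. A minimal sketch, with made-up prices:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up prices, one column
prices = np.array([[40.0], [55.0], [70.0], [100.0]])

scaler = MinMaxScaler()
scaled = scaler.fit_transform(prices)        # values now between 0 and 1
restored = scaler.inverse_transform(scaled)  # back to the original scale

print(scaled.ravel())
print(restored.ravel())
```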

As with most of data science, the answer to all this is to test and find out.

Try normalising separate columns, then the whole dataset. See which gives you better results, then run with that one.

Whatever your answer, I will show you how to normalise with each of the different options.
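One way to run that test is sketched below. Everything in it is an assumption for illustration: the synthetic dataset, the choice of a k-nearest neighbours classifier, and 5-fold cross-validation as the measure of "better results". Swap in your own data and model.

```python
from sklearn.compose import ColumnTransformer
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Synthetic data: 4 features, with the first one blown up to a much larger scale
X, y = make_classification(
    n_samples=300, n_features=4, n_informative=3, n_redundant=0, random_state=0
)
X[:, 0] = X[:, 0] * 1_000_000

# Option A: normalise only the large-scale column, pass the rest through unchanged
some_cols = ColumnTransformer(
    [("scale", MinMaxScaler(), [0])], remainder="passthrough"
)
# Option B: normalise every column
all_cols = MinMaxScaler()

scores = {}
for name, prep in [("some columns", some_cols), ("all columns", all_cols)]:
    # The scaler sits inside the pipeline so it is fitted on each training fold only
    model = make_pipeline(prep, KNeighborsClassifier())
    scores[name] = cross_val_score(model, X, y, cv=5).mean()
    print(name, round(scores[name], 3))
```

Whichever option scores higher on your own data is the one to run with.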

How to normalize one column?

For this, we use the preprocessing functions from the sklearn library.

This is where we use MinMaxScaler.

    import pandas as pd
    from sklearn import preprocessing

    data = {'nums': [5, 64, 11, 59, 58, 19, 52, -4, 46, 31, 17, 22, 92]}
    df = pd.DataFrame(data)

    x = df  # save dataframe in new variable
    min_max_scaler = preprocessing.MinMaxScaler()  # create the min-max scaler
    x_scaled = min_max_scaler.fit_transform(x)  # fit the scaler and transform the data
    df = pd.DataFrame(x_scaled)  # save the scaled data into a dataframe

Result

Your column is now normalised. You may want to rename the column to its original name, as converting the scaled array back into a dataframe drops the column names.

Normalizing multiple columns

If you want to normalise many columns, you don't need to do much extra. Create a subset of the columns you want to normalise.

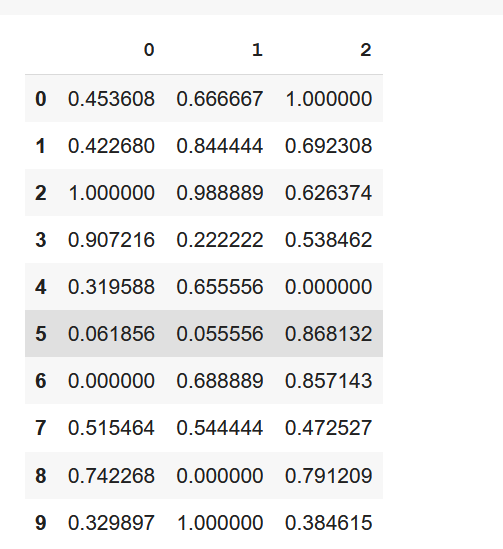

    data = {'nums_1': [39,36,92,83,26,1,-5,45,67,27],
            'nums_2': [57,73,86,17,56,2,59,46,-3,87],
            'nums_3': [97,69,63,55,6,85,84,49,78,41]}
    df = pd.DataFrame(data)

    cols_to_norm = ['nums_2','nums_3']
    x = df[cols_to_norm]

    min_max_scaler = preprocessing.MinMaxScaler()
    x_scaled = min_max_scaler.fit_transform(x)
    df = pd.DataFrame(x_scaled)

You can merge the columns back into the original dataframe if you want to.
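A possible way to do the merge is sketched below. It keeps the original dataframe in df, gives the scaled columns their names back, and joins them to the untouched column. The names scaled and df_merged are chosen for this example only.

```python
import pandas as pd
from sklearn import preprocessing

data = {'nums_1': [39, 36, 92, 83, 26, 1, -5, 45, 67, 27],
        'nums_2': [57, 73, 86, 17, 56, 2, 59, 46, -3, 87],
        'nums_3': [97, 69, 63, 55, 6, 85, 84, 49, 78, 41]}
df = pd.DataFrame(data)

cols_to_norm = ['nums_2', 'nums_3']
min_max_scaler = preprocessing.MinMaxScaler()
x_scaled = min_max_scaler.fit_transform(df[cols_to_norm])

# Put names and index back on the scaled values
scaled = pd.DataFrame(x_scaled, columns=cols_to_norm, index=df.index)

# Drop the unscaled versions and join the scaled ones in their place
df_merged = df.drop(columns=cols_to_norm).join(scaled)

print(df_merged)
```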

Normalising the whole dataset

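This is the simplest one, and probably something you already do.

It is similar to normalising a single column. We just pass the whole dataframe instead. Note that MinMaxScaler still scales each column by its own minimum and maximum, so this normalises every column in one call rather than applying one shared factor to the whole table.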

    x = df
    min_max_scaler = preprocessing.MinMaxScaler()
    x_scaled = min_max_scaler.fit_transform(x)
    df_normalized = pd.DataFrame(x_scaled)

Now we have normalised a dataframe.

Now you can go on your merry way.