Creating an English to Shakespeare Translation AI

This project was supposed to have a lot more bells and whistles than a simple translation model. But as the time investment got bigger and bigger, I had to cut my losses.

The model I created translates normal English into Shakespearean English. The original idea was to generate Eminem lyrics written in a Shakespearean style. A bit much, right? 😅

While I may start working on the original features of the project later on, I think it’s a good idea to show the progress I have right now.

Starting The Project First Time Round

The first step was the Shakespeare translation element. I did a fair amount of googling to find projects similar to mine, so I would have an idea of what others did. I eventually found a project that translates between Shakespearean English and normal English. The most important part was their data: a corpus of various Shakespeare plays in both Shakespearean English and normal English.

Now, this is where I drove myself into a ditch. I knew I wanted to create an RNN for the translation task, but the code on GitHub was highly outdated. So I wanted to find another tutorial that I could use for the model. I was able to find some, but they led to more pre-processing work.

The first step was knowing what type of data I was dealing with, because the data was not a normal txt file. It took me a while to even work out how to access the file. I was able to access it by converting it into a normal txt file. Little did I know that this would cause me more problems down the line.

As I was working with a language translation model, I needed the data to be parallel: each line in one list has to match its translation at the same position in the other list. The problem was that when I previewed the text, the two lists were not aligned, and I was never able to fix it. This pre-processing period added multiple hours to the project, only to lead to a dead end.

Trying to encode the sequences burned hours of my time. I tried various ways, from NLP libraries like spaCy, to smaller libraries like PyTorch-NLP, to finally following the tutorial’s Keras library. When I eventually found workarounds, they led to big memory issues, as they tried to encode the whole dataset at once. Even with small batches, pre-processing time still increased a lot.

The main issues stemmed from me doing a hack job of pre-processing the data. The various libraries conflicted with each other, leading to data type errors, memory issues and high pre-processing time. I wanted to create my model using PyTorch, so other libraries like Keras were only for pre-processing, not for modelling. But the conflict between half following the tutorial and my own customisations led to more problems.

A new approach

So, I decided to abandon ship and create a new notebook, using a new model from a PyTorch tutorial and accessing the files directly without converting them to a text file.

From the previous notebook, I learnt that you can access the snt files without converting them into txt files. I had also tried various other language translation code, but it led to long pre-processing times, memory issues or long training times.

In the new notebook, I imported all the data files the GitHub repo produced, by following the repo’s instructions and updating the code. The pre-processing was a lot more organised, as I had learnt from a few mistakes in the previous notebook. While most of the data files did not get used, it was easier to access the data that I needed and easier to make changes to it.

This time the text was aligned correctly, fixing the main problem of the last notebook.

# Aligned dev and train files

modern_dev_aligned_path = '/content/cloned-repo/cache/all_modern.snt.aligned_dev'

modern_train_aligned_path = '/content/cloned-repo/cache/all_modern.snt.aligned_train'

modern_dev_aligned_file = open(modern_dev_aligned_path, "r")

modern_train_aligned_file = open(modern_train_aligned_path, "r")

content_modern_dev_aligned = modern_dev_aligned_file.read()

content_modern_train_aligned = modern_train_aligned_file.read()

modern_dev_aligned_list = content_modern_dev_aligned.split("\n")

modern_train_aligned_list = content_modern_train_aligned.split("\n")

modern_dev_aligned_file.close()

modern_train_aligned_file.close()

print(modern_dev_aligned_list[:10])

print('\n ----------- \n')

print(modern_train_aligned_list[:10])

['It’s not morning yet.', 'Believe me, love, it was the nightingale.', 'It was the lark,

-----------

['I have half a mind to hit you before you speak again.', 'But if Antony is alive, healthy, friendly with Caesar,

# Aligned shak dev and train files

shak_dev_aligned_path = '/content/cloned-repo/cache/all_original.snt.aligned_dev'

shak_train_aligned_path = '/content/cloned-repo/cache/all_original.snt.aligned_train'

shak_dev_aligned_file = open(shak_dev_aligned_path, "r")

shak_train_aligned_file = open(shak_train_aligned_path, "r")

content_shak_dev_aligned = shak_dev_aligned_file.read()

content_shak_train_aligned = shak_train_aligned_file.read()

shak_dev_aligned_list = content_shak_dev_aligned.split("\n")

shak_train_aligned_list = content_shak_train_aligned.split("\n")

shak_dev_aligned_file.close()

shak_train_aligned_file.close()

print(shak_dev_aligned_list[:10])

print('\n ----------- \n')

print(shak_train_aligned_list[:10])

['It is not yet near day.', 'Believe me, love, it was the nightingale.', 'It was the lark, the herald of the morn; No nightingale.' …]

-----------

['I have a mind to strike thee ere thou speak’st.', 'Yet if thou say Antony lives, …]

After working out the pre-processing, I started implementing the seq2seq model from the PyTorch tutorial.

While following the PyTorch tutorial, I needed to do a bit more pre-processing so the data could fit into the model. To do that, I needed to make sure my dataset was in a format similar to the tutorial’s data.

Like so:

I am cold. J'ai froid.

I turned the separate lists of Shakespeare text and modern text into tuples, so each modern line is paired with its Shakespeare equivalent. Then I added tab characters in between the entries to mimic the format of the tutorial’s data. But that did not work, so I just kept the tuples, which worked fine.
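The pairing step can be sketched roughly as below. This is a minimal illustration rather than the notebook's actual code: `make_pairs` is a hypothetical helper name, and the sample sentences are taken from the previews above.

```python
def make_pairs(modern_lines, shak_lines):
    """Pair each modern sentence with the Shakespeare line at the same index."""
    # The two lists are only useful if they are aligned line by line.
    if len(modern_lines) != len(shak_lines):
        raise ValueError("parallel lists must have the same length")
    # Skip positions where either side is empty (e.g. after a trailing newline).
    return [(m, s) for m, s in zip(modern_lines, shak_lines) if m and s]


modern = ["It is not morning yet.", "Believe me, love, it was the nightingale.", ""]
shak = ["It is not yet near day.", "Believe me, love, it was the nightingale.", ""]
pairs = make_pairs(modern, shak)
print(pairs[0])
```

Checking the lengths up front is a cheap way to catch the misalignment problem from the first notebook before it reaches the model.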

Later I came to set up the model. When I started to run the training function, it gave me an error.

This led to a rabbit hole of me spending days trying to debug my code. I eventually fixed the various bugs. The main cause was that I was not using the filterPairs function, which caps the sentences to a maximum length. Without it, longer sentences were entering the model. This caused errors because the code was designed to take in only sentences shorter than the MAX_LENGTH variable.

MAX_LENGTH = 10

eng_prefixes = (
    "i am ", "i m ",
    "he is", "he s ",
    "she is", "she s ",
    "you are", "you re ",
    "we are", "we re ",
    "they are", "they re "
)

def filterPair(p):
    return len(p[0].split(' ')) < MAX_LENGTH and \
        len(p[1].split(' ')) < MAX_LENGTH and \
        p[1].startswith(eng_prefixes)

def filterPairs(pairs):
    return [pair for pair in pairs if filterPair(pair)]

The only thing I changed was removing the eng_prefixes check, as it was not relevant to my data: with it, my dataset was reduced to two sentences, which was unusable for training.
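For illustration, a length-only version of the filter might look like the sketch below. The function names and the sample pairs are assumptions made for this example (the sentences are borrowed from the outputs in this post), not the exact notebook code.

```python
MAX_LENGTH = 10  # same cap as the tutorial


def filter_pair(pair, max_length=MAX_LENGTH):
    """Keep a pair only if both sentences are shorter than max_length words."""
    source, target = pair
    return (len(source.split(' ')) < max_length
            and len(target.split(' ')) < max_length)


def filter_pairs(pairs, max_length=MAX_LENGTH):
    return [pair for pair in pairs if filter_pair(pair, max_length)]


sample_pairs = [
    ("Go away!", "Away!"),
    ("Where are these lads?", "Where are these lads?"),
    ("I have half a mind to hit you before you speak again.",
     "I have a mind to strike thee ere thou speakest."),
]
kept = filter_pairs(sample_pairs)
print(len(kept))
```

The third pair is dropped because its modern sentence has 12 words, so only short sentences ever reach the encoder.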

After that, I just had to fix a few minor issues plotting charts and saving the weights.

The results after a few attempts:

> You’re making me go off-key.

= Thou bring’st me out of tune.

< What me me here. <EOS>

> Go away!

= Away!

< Away! <EOS>

> Gentlemen, we won’t stay here and anger you further.

= Gentlemen both, we will not wake your patience.

< Tis we will not not you your <EOS>

> A little bit.

= A little, by your favor.

< A good or <EOS>

> You were right the first time.

= You touched my vein at first.

< You do the the the <EOS>

> Where are these lads?

= Where are these lads?

< Where are these these <EOS>

> You’ve heard something you shouldn’t have.

= You have known what you should not.

< You have said you have you true. <EOS>

> Or else mismatched in terms of years;— Oh spite!

= Or else misgraffed in respect of years— O spite!

< Or is a dead, of of of <EOS>

> Oh why, nature, did you make lions?

= O wherefore, Nature, didst thou lions frame?

< O wherefore, Nature, didst thou didst <EOS>

> Come down, look no more.

= Come down, behold no more.

< Come weep no more. <EOS>

input = I go to work.

output = I go to your <EOS>

The results of this model leave a lot to be desired. But I’m happy to have a working model up and running. I can improve the results by increasing the number of iterations and the amount of data sent to the model. The Google Colab GPU allows me to get a decent amount of training done. But there is a bug where Colab states the runtime has stopped while the model is still training. Luckily the runtime comes back after training. But I can’t tell when the runtime will not come back, which would cut off my training.


To make the project slightly more visual, I created a Streamlit app where the user can input text and the translation is returned. I honestly wanted it to look like Google Translate, but I don’t know how to do that.

As I had never used Streamlit before, I had to find a good example of a translation project. I was able to find this repo, which I used as the basis for my Streamlit app.

I first needed to load this repo and launch its Streamlit app, to get a feel for how the app works and an idea of how to edit the repo for my model.

Loading the Streamlit app took a lot of work, because Streamlit would not install on my local machine in the beginning. So I opted to use Google Colab. But Streamlit serves the app on localhost, which I can’t reach directly from Google Colab, so I had to find a way to expose local addresses from inside Google Colab.

I found this Medium article, which suggested using the ngrok service to create a tunnel that routes the Streamlit local address to a public URL.

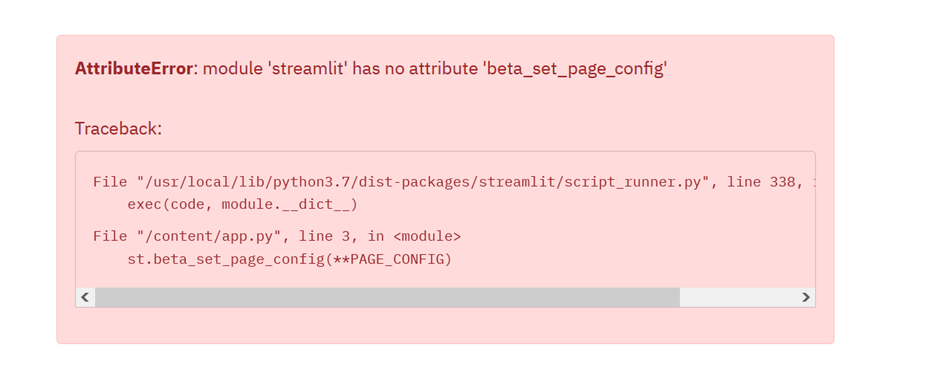

After a bit of fiddling around, I was able to run the app. The only problem was that Python started giving me errors in Streamlit, which looked like this:

After a few fixes I was able to get the repo’s Streamlit app running:

Now I had to replace the repo’s TensorFlow model with my PyTorch model. I knew this was going to be a daunting task, and I was proved right: I was hit with numerous errors and roadblocks when setting it up.

One of the most time-consuming tasks was loading objects from the notebook into the Streamlit app. The objects were pickled, which led to errors because their classes had been defined in the notebook’s __main__ namespace. So I had to create a custom unpickler class.
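A custom unpickler of this kind can be sketched as follows. This is one possible approach and not necessarily the class used in the app: `Vocab`, `MainRemapUnpickler` and `load_notebook_pickle` are hypothetical names, and `Vocab` stands in for whatever class was defined in the notebook. It relies on overriding `pickle.Unpickler.find_class`, which is the standard hook for controlling how class names are resolved.

```python
import io
import pickle


class Vocab:
    """Hypothetical stand-in for a class that was defined in the notebook."""

    def __init__(self):
        self.word2index = {}


class MainRemapUnpickler(pickle.Unpickler):
    """Resolve classes pickled under '__main__' from an explicit mapping."""

    def __init__(self, file, class_map):
        super().__init__(file)
        self.class_map = class_map

    def find_class(self, module, name):
        # Objects pickled in a notebook record their class as '__main__.<name>'.
        if module == "__main__" and name in self.class_map:
            return self.class_map[name]
        # Everything else is resolved the normal way.
        return super().find_class(module, name)


def load_notebook_pickle(raw_bytes, class_map):
    """Unpickle bytes, looking up '__main__' classes in class_map."""
    return MainRemapUnpickler(io.BytesIO(raw_bytes), class_map).load()
```

In the app, `class_map` would map each class name from the notebook to the class imported in the Streamlit script, for example `{"Vocab": Vocab}`.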

Loading the model was easier, and I was able to test it on my local machine first. Loading the model on the Google Colab version led to some issues, but they were minor and I was able to fix them: incorrect paths to the objects and model, and making sure the model loaded on a CPU device rather than a GPU device.

Afterwards, I was able to get some results:

Later on, I added a random pairs button that would show multiple examples in one go:

Adding the random pairs feature took a bit of adjusting, as multiple errors came up with the decoding of the text.

After I fixed the encoding issues I moved on to implementing the attention matrices.

Conclusion

I mentioned this at the beginning of the blog post: this project had a much bigger scope, but it had to be cut. I may implement the second part of this project later on, which was generating Eminem lines and feeding them into the Shakespeare translation model.

After this project, I want to do a lot more reading about RNNs, because I don’t think my knowledge is up to scratch. I plan to do things like implementing RNNs using NumPy, implementing some RNN-related papers, and learning the maths required to understand the topic. Hopefully these actions will improve my understanding of RNNs and deep learning in general.

If you found this article interesting, then check out my mailing list, where I write more stuff like this.

Image classifier for Oolong tea and Green tea

Developing the Dataset

In this project, I will be making an image classifier. My previous attempts a while ago, as I remember, did not work. To change it up a bit, I will be using the PyTorch framework rather than TensorFlow. As this will be my first time using PyTorch, I will be taking a tutorial before I begin my project. The project is a classifier that spots the difference between bottled oolong tea and bottled green tea.

The tutorial I used was PyTorch's 60 Minute Blitz. (It did take me more than 60 minutes to complete, though.) After typing out the tutorial I got used to PyTorch, so I started moving on to the project. As this is an image classifier, I needed to get a whole lot of images into my dataset. I first stumbled upon a Medium article which used a good scraper. But even after a few edits, it did not work.

So I moved to using Bing for image search. Bing has an image API you can use, which makes it easier to collect images compared to Google. I used this article from pyimagesearch. I had a few issues with the API in the beginning, as the endpoints that Microsoft gave me did not work with the tutorial. After looking around and making a few edits, I was able to get it working.

But looking at the image folder gave me this:

After looking through the code, I noticed that the program did not produce new images but kept overwriting the same image named “000000”. This was because I had not copied the final section of code from the blog post, which updates a counter variable.

Now that I had the tutorial code working, I could try my own search terms to create my dataset. I started with green tea, using the term "bottle green tea", for which the program gave me these images:

Afterwards, I got oolong tea, by using the term “bottle oolong tea”.

Now I had to personally go through the dataset and delete any images that were not relevant to the class. The images I deleted looked like this:

This is because we want the classifier to work on bottled drinks, so leaves are not relevant, regardless of how tasty they are.

There were a few blank images. Needless to say, they are not useful for the image classifier.

Even though this image has a few green tea bottles, it also has an oolong tea bottle, which would confuse the model. So it's better to keep only images with green tea bottles, rather than a whole variety that does not belong to one class.

After I did that with both datasets, I was ready to move on to creating the model. So I went to Google Colab and imported PyTorch.

As the dataset has fewer than 200 images, I thought it would be a good idea to apply data augmentation. I first found this tutorial, which used PyTorch transformations.

When applying the transformations, I ran into a few issues: the images did not plot correctly, nor were my images recognised. But I was able to fix it.

The issues stemmed from not slicing the dataset correctly, as ImageFolder (a torchvision helper class) returns each item as an (image, label) tuple, not just an image.

Developing the model

After that, I started working on developing the model. I used the CNN from the 60 Minute Blitz tutorial. One of the first errors I dealt with was data not going through the network properly.

shape '[-1, 400]' is invalid for input of size 179776

I was able to fix this issue by changing the kernel sizes to 2 x 2 and the number of feature maps to 64.

self.fc1 = nn.Linear(64 * 2 * 2, 120)
x = x.view(-1, 64 * 2 * 2)

Straight afterwards I ran into another error:

ValueError: Expected input batch_size (3025) to match target batch_size (4).

This was fixed by reshaping the x variable again, with the help of this forum post.

x = x.view(-1, 64 * 55 * 55)

Then another error 😩.

RuntimeError: size mismatch, m1: [4 x 193600], m2: [256 x 120] at /pytorch/aten/src/TH/generic/THTensorMath.cpp:41

This was fixed by changing the linear layer again.

self.fc1 = nn.Linear(64 * 55 * 55, 120)

Damn, I did not know one dense layer can give me so many headaches.
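The numbers in these errors can be worked out ahead of time with the usual output-size formula for convolution and pooling layers. The sketch below assumes 224 x 224 input images and the tutorial's layout of two convolutions, each followed by a 2 x 2 max-pool. The post does not state the input size, but 224 is consistent with all three error messages. The helper names are made up for this example.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a conv or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def flat_features(image_size, conv_kernel, out_channels):
    """Flattened size after conv -> 2x2 max-pool -> conv -> 2x2 max-pool."""
    size = image_size
    for _ in range(2):
        size = conv_out(size, conv_kernel)          # convolution, stride 1
        size = conv_out(size, kernel=2, stride=2)   # 2x2 max-pool
    return out_channels * size * size


# Assumed 224x224 input; 5x5 kernels and 16 maps as in the tutorial network.
print(flat_features(224, conv_kernel=5, out_channels=16))
# 2x2 kernels and 64 feature maps, as in the modified network.
print(flat_features(224, conv_kernel=2, out_channels=64))
```

Under that assumption the first call gives 44944 per image, and a batch of 4 gives the 179776 from the first error. The second call gives 193600, which is the 64 * 55 * 55 that the linear layer finally needed.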

After training, I needed to test the model. I did not make the test folder before making the model (rookie mistake). I made it quickly afterwards by using the first 5 images of each class. This is a bad thing to do: it contaminates the test data, so the test accuracy can hide overfitting. But I needed to see if the model was working at the time.

I wanted to plot one of the images in the test folder, so I borrowed the code from the tutorial. This led to an error, which I fixed by changing the range to one instead of 5. This was because my model only has 2 labels (tensor[0] and tensor[1]), not 4.

When I loaded the model, it threw an error. This was fixed by resizing the images in the test folder. After a few runs of the model, I noticed that it did not print the loss, so I edited the code to do so.

if i % 10 == 9:  # print the average loss every 10 mini-batches
    print('[%d, %d] loss: %.5f' %
          (epoch + 1, i + 1, running_loss / 10))
    running_loss = 0.0

As we can see the loss is very high.

When I tested the model on the test folder it gave me this:

Which means it’s at best guessing. I later found this was because it picked every image as green tea. With 5 of the 10 test images labelled green tea, this led it to be right 50% of the time.

So this led me to the world of model debugging: trying to reduce the loss and improve accuracy.

Debugging the model

I started to make some progress debugging my model when I found this Medium article.

The first point the writer made was to start with a simple problem that is known to work with your type of data. I thought I was already using a simple model designed for image data, as I had borrowed it from the PyTorch tutorial, but it did not work. So I opted for a simpler model shape, which I found in a TensorFlow tutorial. It only had 3 convolutional layers and two dense layers. I had to change the final layer parameters, as they were giving me errors: the model was designed with 10 targets in mind instead of 2. Afterwards, I fiddled around with the hyperparameters. With that, I was able to get the accuracy on the test images to 80% 😀.

Accuracy of the network on the 10 test images: 80 %

Testing the new model

As the test dataset was contaminated because I had used images from the training dataset, I wanted to rebuild the test dataset with new images, to make sure the accuracy was correct.

To restructure it, I followed this style:

https://stackoverflow.com/a/60333941

Then I loaded the train and test datasets separately:

train_dataset = ImageFolder(root='data/train')
test_dataset = ImageFolder(root='data/test')

For the test images, I decided to use Google instead of Bing, as it gives different results. After that, I tested the model on the new test dataset.

Accuracy of the network on the 10 test images: 70 %

As this was not a significant decrease, the model seems to have learnt something about green tea and oolong tea.

Using the code from the PyTorch tutorial, I wanted to analyse it even further:

Accuracy of Green_tea_test : 80 %
Accuracy of oolong_tea_test : 60 %
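The per-class breakdown boils down to counting correct predictions separately for each true label. Below is a small framework-free sketch of that idea. It is not the tutorial's code, and the label lists are made up so that they reproduce the 80 % and 60 % figures above.

```python
from collections import Counter


def per_class_accuracy(truth, predicted):
    """Return {label: fraction of that label's samples predicted correctly}."""
    totals = Counter(truth)
    correct = Counter(t for t, p in zip(truth, predicted) if t == p)
    return {label: correct[label] / totals[label] for label in totals}


# Made-up labels: 5 green tea and 5 oolong test images.
truth = ["green"] * 5 + ["oolong"] * 5
predicted = ["green"] * 4 + ["oolong"] + ["oolong"] * 3 + ["green"] * 2
accuracy = per_class_accuracy(truth, predicted)
print(accuracy)
```

With these labels, 7 of the 10 images are right overall, which matches the 70 % total while showing that the oolong class is the weaker one.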

Plotting the predictions

While I like this, I want the program to tell me which images it got wrong. So, I went to work on that. To do this, I stitched the image data together with the labels in a separate list.

merge_list = []
for i, t, p in zip(img_list, truth_label, predicted_label):
    one_merge_dict = {'image': i, 'truth_label': t, 'predicted_label': p}
    merge_list.append(one_merge_dict)

print(merge_list)

On my first try I got this:

As we can see, it's very cluttered and shows all the images. To clean it up I removed the unneeded text.

Now I can start separating the images into right and wrong.

I was able to do this by using a small if statement.

Now the program correctly plots the images with the incorrect label. But the placement of the images is wrong. This is because each image still uses its position among all the images, even though the if statement skips the correctly classified ones.

I corrected it by changing the loop:
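One way such a loop can be written is to filter out the wrong predictions first and only then number them, so the subplot positions have no gaps. The sketch below is an illustration under that assumption, not a copy of the notebook's fix. The helper name and the sample data are made up, and the plotting call itself is left as a comment so the example runs without matplotlib.

```python
def misclassified_with_positions(merge_list):
    """Return (subplot_position, item) for wrongly predicted items only."""
    wrong = [item for item in merge_list
             if item['truth_label'] != item['predicted_label']]
    # Number the wrong items 1, 2, 3... so the subplots have no gaps.
    return list(enumerate(wrong, start=1))


# Made-up example data; 'image' would normally hold the image tensor.
merge_list = [
    {'image': 'img0', 'truth_label': 0, 'predicted_label': 0},
    {'image': 'img1', 'truth_label': 0, 'predicted_label': 1},
    {'image': 'img2', 'truth_label': 1, 'predicted_label': 1},
    {'image': 'img3', 'truth_label': 1, 'predicted_label': 0},
]
for position, item in misclassified_with_positions(merge_list):
    # With matplotlib, a plt.subplot(1, n_wrong, position) call would go here.
    print(position, item['image'])
```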

I wanted to get rid of the whitespace, so I decided to change the plotting of images.

ax = plt.subplot(1, 4, i + 1)

fig = plt.figure(figsize=(15,15))

Now I have an idea of what the model got wrong. In the first sample, the green tea does not have the traditional green design, so it’s understandable that the model got it wrong. The second sample was oolong tea, but the model misclassified it as green tea. My guess is that this is because the bottle has a very light colour tone, compared to the golden or orange tones of the oolong bottles in the training data. In the third example, the bottle has the traditional oolong design with an orange colour palette, but the model misclassified it as green tea. I guess that the leaf on the bottle affected the judgement of the model, leading it to classify it as green tea.

Now I have finished the project. This is not to say that I won’t come back to it, as additions could be made on the implementation side: a mobile app that can detect oolong or green tea with your phone's camera, or a simple web app where users can upload their bottled tea images and have the model classify them on the website.

Predicting Flooding with Python

Getting Rainfall Data and Cleaning

For this project, I will make a model that shows long-term flooding risk in an area. It relates to climate change and machine learning, which I have been writing a lot about recently. The idea was to predict whether an area has a higher risk of flooding in 10 years. The general approach was to get rainfall data, then work out if the rainfall exceeded land elevation. After that, the area can be counted as flooded or not.

To get started I had to find rainfall data. Luckily, it was not too hard. But the question was which rainfall data I wanted to use. First, I found the national rainfall data (UK), which looked very helpful. But as the analysis would be done on a geographic basis, I decided to use London rainfall data. When I got the rainfall data it looked like this:

Some of the columns gave information about soil moisture, which was not relevant to the project, so I had to get rid of them. Also, as it was a geographic analysis, I decided to pick the column closest to the general location I wanted to map. So I picked Lower Lee rainfall, as I will analyse East London.

To complete the data wrangling I used pandas. No surprise there. To start, I had to get rid of the first row in the dataframe, as it works as a second header. This makes sense, as the data was meant for an Excel spreadsheet.

I used this to get rid of the first row:

df = df[1:]

After that, I had to get rid of the locations I was not going to use. So, I used pandas' iloc indexer to slice out a significant number of columns in the dataframe.

df = df.drop(df.iloc[:, 1:6], axis=1)

After that, I used the dataframe drop function to get rid of the columns by name.

df = df.drop(['Roding', 'Lower Lee.1', 'North Downs South London.1', 'Roding.1'], axis=1)

Now, before I show you the other stuff I did: I ran into some errors when trying to analyse or manipulate the contents of the dataframe. To fix these issues, I changed the date column into pandas datetime, with the option of parsing the day first, because pandas assumes the American (month-first) date format by default. Then I changed the Lower Lee column into a float type. This had to be done because the first row, which I sliced off earlier, had changed the data type of the columns into non-numeric types. After I did all of this, I could go back to further analysis.

To make the analysis more manageable, I decided to sum up the rainfall on a monthly basis rather than a daily basis, as otherwise I would have to deal with a lot of extra rows. Monthly rainfall also makes it easier to see changes in rainfall at a glance. To do this I had to group the dataframe into monthly data. This is something I was stuck on for a while, but I was able to find the solution.

First, I had to create a new dataframe that grouped the DateTime column by month. This is why I had to change the data type earlier. Second, I used the dataframe aggregate function to sum the values, followed by the unstack function, which pivots the index labels. Third, I used reset_index(level=[0,1]) to revert the multi-index into a single-index dataframe. Then I dropped the level_0 column and renamed the remaining columns to date and rain.
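The core idea of these steps (parse day-first dates, convert the rainfall to numbers, then sum per month) can be shown with plain standard-library Python. This is a deliberately simplified sketch and swaps in a dictionary for the pandas groupby described above. The `monthly_totals` name, the `dd/mm/yyyy` format and the sample values are assumptions made for this example.

```python
from collections import defaultdict
from datetime import datetime


def monthly_totals(daily_rows):
    """Sum daily rainfall into (year, month) buckets.

    daily_rows: iterable of (date_string, rainfall) with day-first dates.
    """
    totals = defaultdict(float)
    for date_string, rainfall in daily_rows:
        # '%d/%m/%Y' reads 03/02/2019 as 3 February, not 2 March.
        day = datetime.strptime(date_string, '%d/%m/%Y')
        totals[(day.year, day.month)] += float(rainfall)
    return dict(sorted(totals.items()))


# Made-up values; rainfall arrives as strings, as in the raw spreadsheet.
rows = [('01/01/2019', '1.5'), ('31/01/2019', '2.5'), ('03/02/2019', '4.0')]
print(monthly_totals(rows))
```

The `float(rainfall)` call plays the same role as converting the Lower Lee column to a float type, and the explicit format string plays the role of the day-first option.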

Analysing the Data

One of the major issues that popped up was the data type of the date column. After tonnes of digging around on Stack Overflow, I found the solution was to convert it to a timestamp and then convert it back into a DateTime format. I think this has to do with changing the dataframe into a monthly dataframe, which must have messed up the data type, so I had to change it again.

A minor thing I had to adjust was the index. When I first plotted the graphs, the forecast did not show the date, only an increasing number. So, I went to the tutorial’s notebook, where the dataframe had the date as the index. I changed my dataset so the index contains the dates, so that when the forecast is plotted the dates are shown on the x-axis.

Now for the analysis. This is a time-series analysis, as we are doing forecasting. I followed this article here. I used the statsmodels package, which provides models for statistical analysis. First, we did a decomposition, which separated the series into trend, seasonal and residual components.

Next, the tutorial asks us to check if the time series is stationary. In the article, it's defined as “A time series is stationary when its statistical properties such as mean, variance, and autocorrelation are constant over time. In other words, the time series is stationary when it is not dependent on time and not have a trend or seasonal effects.”

To check if the data is stationary, we used autocorrelation function and partial autocorrelation function plots.

There is a quick cut-off, so the data is stationary. The autocorrelation and partial autocorrelation functions give information about how much time series values depend on earlier values.

Next we used another Python package called pmdarima, which helps me decide on my model.

import pmdarima as pm

model = pm.auto_arima(new_index_df_new_index['Rain'], d=1, D=1,
                      m=12, trend='c', seasonal=True,
                      start_p=0, start_q=0, max_order=6,
                      test='adf', stepwise=True, trace=True)

All of the settings were taken from the tutorial. I will let the tutorial explain the numbers:

“Inside auto_arima function, we will specify d=1 and D=1 as we differentiate once for the trend and once for seasonality, m=12 because we have monthly data, and trend='C' to include constant and seasonal=True to fit a seasonal-ARIMA. Besides, we specify trace=True to print status on the fits. This helps us to determine the best parameters by comparing the AIC scores.”

After that I split the data into train and test sets.

train_x = new_index_df_new_index[:int(0.85*(len(new_index_df_new_index)))]
test_x = new_index_df_new_index[int(0.85*(len(new_index_df_new_index))):]

When splitting the data for the first time, I used scikit-learn’s train_test_split function. But this led to some major errors later on when plotting the data, so I'm using the tutorial's method.
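A likely reason for those errors (my assumption, as the post does not say) is that train_test_split shuffles rows by default, which breaks the time order a forecast needs. The slicing approach keeps the order intact, as this small sketch shows. `chronological_split` is a hypothetical helper and the data is a placeholder.

```python
def chronological_split(values, train_fraction=0.85):
    """Split an ordered sequence into (train, test) without shuffling."""
    cut = int(train_fraction * len(values))
    return values[:cut], values[cut:]


monthly_rain = list(range(20))  # placeholder for 20 months of data
train, test = chronological_split(monthly_rain)
print(len(train), len(test))
```

Every value in the test part comes after every value in the training part, so the forecast can be plotted as a continuation of the training period.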

Then we trained a SARIMAX model based on the parameters produced earlier.

from statsmodels.tsa.statespace.sarimax import SARIMAX

model = SARIMAX(train_x['Rain'],
                order=(2,1,0), seasonal_order=(2,1,0,12))
results = model.fit()
results.summary()

Plotting the forecast

Now that we have a trained model, we can start work on forecasting.

forecast_object = results.get_forecast(steps=len(test_x))
mean = forecast_object.predicted_mean
conf_int = forecast_object.conf_int()
dates = mean.index

These variables help us plot the forecast. The forecast is as long as the test dataset. The mean is the average prediction. The confidence interval gives us a range in which the values are expected to lie. And dates provides an index so we can plot against the date.

plt.figure(figsize=(16,8))
df = new_index_df_new_index
plt.plot(df.index, df, label='real')
plt.plot(dates, mean, label='predicted')
plt.fill_between(dates, conf_int.iloc[:,0], conf_int.iloc[:,1], alpha=0.2)
plt.legend()
plt.show()

This is an example of an in-sample forecast. Now let's see how we make an out-of-sample forecast.

pred_f = results.get_forecast(steps=60)

pred_ci = pred_f.conf_int()

ax = df.plot(label='Rain', figsize=(14, 7))

pred_f.predicted_mean.plot(ax=ax, label='Forecast')

ax.fill_between(pred_ci.index,
                pred_ci.iloc[:, 0],
                pred_ci.iloc[:, 1], color='k', alpha=.25)

ax.set_xlabel('Date')

ax.set_ylabel('Monthly Rain in lower lee')

plt.legend()

plt.show()

This is forecasting 60 months into the future.

Now that we have forecasting data, I needed to work out which areas could get flooded.

Getting Elevation Data

To work out areas that are at risk of flooding, I had to find elevation data. After googling around, I found that the UK government provides elevation data of the country, collected using LIDAR. While I was able to download the data, I worked out that I did not have a way to view the data in Python, and I might have to pay for and learn a new program called ArcGIS, which is something I did not want to do.

So I found a simpler alternative: the Google Maps Elevation API, where you can get the elevation of a location using coordinates. I was able to access the elevation data using the Python package requests.

import requests

r = requests.get('https://maps.googleapis.com/maps/api/elevation/json?locations=39.7391536,-104.9847034&key={}'.format(key))

r.json()

{'results': [{'elevation': 1608.637939453125,
   'location': {'lat': 39.7391536, 'lng': -104.9847034},
   'resolution': 4.771975994110107}],
 'status': 'OK'}

Now we need to work out when the point will get flooded. Using the rainfall data, we compare the difference between elevation and rainfall. If the rain exceeds the elevation, then the place counts as underwater.
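Before any comparison, the elevation value has to be pulled out of a response shaped like the one above. A minimal sketch of that step follows, using a copy of the sample response instead of a live request so that no API key is needed. `extract_elevation` is a hypothetical helper name.

```python
import json

# Sample response body, copied from the output shown above.
sample_body = '''
{"results": [{"elevation": 1608.637939453125,
              "location": {"lat": 39.7391536, "lng": -104.9847034},
              "resolution": 4.771975994110107}],
 "status": "OK"}
'''


def extract_elevation(payload):
    """Return the first elevation (in metres) from a decoded response."""
    if payload.get("status") != "OK" or not payload.get("results"):
        raise ValueError("no elevation in response: %r" % payload.get("status"))
    return payload["results"][0]["elevation"]


elevation = extract_elevation(json.loads(sample_body))
print("elevation:", elevation)
```

Checking the status field first means a bad key or a quota error fails loudly instead of surfacing later as a missing-key error.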

import json

r = requests.get('https://maps.googleapis.com/maps/api/elevation/json?locations=51.528771,0.155324&key={}'.format(key))

r.json()

json_data = r.json()

print(json_data['results'])

elevation = json_data['results'][0]['elevation']

print('elevation: ', elevation )

rainfall_dates = []
# Series.iteritems() was removed in pandas 2.0; items() is the replacement
for index, values in mean.items():
    print(index)
    rainfall_dates.append(index)

print(rainfall_dates)

for i in mean:
    # print('Date: ', dates_rain)
    print('Predicted Rainfall:', i)
    print('Rainfall vs elevation:', elevation - i)
    print('\n')

Predicted Rainfall: 8.427437412467206

Rainfall vs elevation: -5.012201654639448

Predicted Rainfall: 40.91480530998025

Rainfall vs elevation: -37.499569552152494

Predicted Rainfall: 26.277342698245548

Rainfall vs elevation: -22.86210694041779

Predicted Rainfall: 16.720892909866357

Rainfall vs elevation: -13.305657152038599

As we can see, if the month's rainfall fell all in one day, then the area would get flooded.

diff_rain_ls = []
for f, b in zip(rainfall_dates, mean):
    print('Date:', f)
    print('Predicted Rainfall:', b)
    diff_rain = elevation - b
    diff_rain_ls.append(diff_rain)
    print('Rainfall vs elevation:', diff_rain)
    print('\n')
    # print(f, b)

This allows me to compare the dates with the rainfall vs elevation difference.
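The same comparison can be wrapped in two small functions, which makes the rule easier to reuse for other forecasts. This is only a sketch of the simple rule used in this post, taken as-is. The function names and dates are made up, and the elevation is back-calculated from the printed output above.

```python
def elevation_margins(elevation, forecast):
    """Return [(date, elevation - rainfall)] for each forecast entry."""
    return [(date, elevation - rain) for date, rain in forecast]


def flooded_dates(margins):
    """Dates where rainfall exceeds elevation (negative margin)."""
    return [date for date, margin in margins if margin < 0]


# Elevation back-calculated from the printed output above; dates are made up.
elevation = 3.415235757827758
forecast = [('2019-01', 8.427437412467206), ('2019-02', 2.0)]
margins = elevation_margins(elevation, forecast)
print(margins)
print(flooded_dates(margins))
```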

df = pd.DataFrame(list(zip(rainfall_dates, diff_rain_ls)),
                  columns=['Date', 'diff'])
df.plot(kind='line', x='Date', y='diff')
plt.show()

I did the same thing with the 60-month forecast:

# mean_60 is assumed to be the mean of the 60-month forecast,
# e.g. pred_f.predicted_mean from the forecast above
rainfall_dates_60 = []
for index, values in mean_60.items():
    print(index)
    rainfall_dates_60.append(index)

diff_rain_ls_60 = []
for f, b in zip(rainfall_dates_60, mean_60):
    print('Date:', f)
    print('Predicted Rainfall:', b)
    diff_rain_60 = elevation - b
    diff_rain_ls_60.append(diff_rain_60)
    print('Rainfall vs elevation:', diff_rain_60)
    print('\n')

In the long term, the forecast says there will be less flooding. This is likely because the way the data is collected is not perfect and the timespan is short.

How the Project Fell Short

While I was able to work out the amount of rainfall needed to flood an area, I did not meet the goal of showing it on a map. I could not work out the LIDAR data from earlier, and other Google Maps packages for Jupyter notebooks did not work. So I only have the coordinates and the rainfall amount.

I wanted to make something like this:

For the reasons I mentioned earlier, I could not do it. The idea was to have the map zoomed in to the local area, showing underwater properties and land.

I think that’s the main bottleneck: getting a map of elevation data which can be manipulated in Python. From there, I could create a script that colours areas with a low elevation.

Why you should NOT use this model

While I learnt some stuff with the project, I do think there are some major issues with how I decided which areas are at risk. Just calculating monthly rainfall and finding the difference from the elevation is arbitrary. How relevant is monthly rainfall if rain pours 10x more in a real flood? This is something I started to notice as I went through the project. Floods in the UK happen through flash flooding, where a month’s worth of rain pours in one day. There will be some correlation with normal rainfall. But real flood mappers use other data points, like simulating the physics of the water to see how it will flow and affect the area (hydrology). Other data points can include temperature and snow. Even the data I did have could have been better. The longest national rainfall data went back to the 70s. I think I did a good job by picking the local rain gauge from the dataset (Lower Lee). I wonder if it would have been better to take the average or sum of all the gauges to have a general idea of rainfall in the city.

So apart from not mapping the flooding, this risk assessment is also woefully inaccurate.
